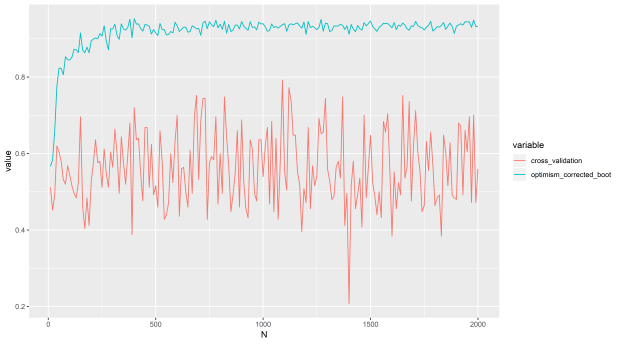

Part 6: How not to validate your model with optimism corrected bootstrapping

When evaluating a machine learning model, using the same data to both train and test it gives an overfitted, overly optimistic result. The model appears to predict much better than it would on completely new data, because the model uses random noise ...
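To make the problem concrete, here is a minimal sketch in plain Python, and not code from this series. It assumes a toy setup: features and labels are pure random noise, so there is nothing real to learn, and the model is a simple 1-nearest-neighbour classifier. The names `make_noise`, `nearest_label` and `accuracy` are hypothetical helpers invented for this illustration.

```python
import random

def make_noise(rng, n, p):
    # Pure noise: Gaussian features and coin-flip labels with no relationship.
    X = [[rng.gauss(0, 1) for _ in range(p)] for _ in range(n)]
    y = [rng.randint(0, 1) for _ in range(n)]
    return X, y

def nearest_label(x, train_X, train_y):
    # 1-nearest-neighbour: return the label of the closest training point.
    best = min(
        range(len(train_X)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(x, train_X[i])),
    )
    return train_y[best]

def accuracy(X, y, train_X, train_y):
    # Fraction of points in (X, y) whose label the model predicts correctly.
    hits = sum(nearest_label(x, train_X, train_y) == label for x, label in zip(X, y))
    return hits / len(y)

rng = random.Random(0)  # fixed seed so the sketch is reproducible
train_X, train_y = make_noise(rng, 200, 5)
new_X, new_y = make_noise(rng, 200, 5)

# Tested on its own training data, each point is its own nearest neighbour.
apparent_acc = accuracy(train_X, train_y, train_X, train_y)
# Tested on fresh noise, the model can only guess.
new_data_acc = accuracy(new_X, new_y, train_X, train_y)

print("accuracy on training data:", apparent_acc)
print("accuracy on new data:", new_data_acc)
```

On the training data the model scores perfectly, because it has simply memorised the noise. On new data drawn the same way, accuracy should sit close to chance (about 0.5), which is the honest answer when there is no signal.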