Information Security: Anomaly Detection and Threat Hunting with Anomalize


Information Security (InfoSec) is critical to a business. For those new to InfoSec, it is the state of being protected against the unauthorized use of information, especially electronic data. A single malicious threat can cause massive damage to a firm, large or small. For this reason, when I (Matt Dancho) saw Russ McRee’s article, “Anomaly Detection & Threat Hunting with Anomalize”, I asked him to repost it on the Business Science blog. In his article, Russ discusses the use of our new R package, anomalize, as a way to detect threats (aka “threat hunting”). Russ is Group Program Manager of the Blue Team (the internal security team that defends against real attackers) for Microsoft’s Windows and Devices Group (WDG), now part of the Cloud and AI (C+AI) organization. He writes toolsmith, a monthly column for information security practitioners, and has written for other publications including Information Security, (IN)SECURE, SysAdmin, and Linux Magazine. The data Russ routinely deals with is massive in scale: he processes security event telemetry of all types (operating systems, network, applications, service layer) for all of Windows, Xbox, the Universal Store (transactions/purchases), and a few others. Billions of events in short order.

Learning Trajectory

This is a great article from a master of information security, Russ McRee. You’ll learn how Russ is using our new package for time series anomaly detection, anomalize, within his Blue Team (internal threat-defending team) work at Microsoft. He provides real-world examples of “threat hunting”, the act of identifying malicious attacks on servers, and shows how anomalize can help to algorithmically identify threats. Specifically, Russ shows you how to detect anomalies in security event logs as shown below.

Threat Hunting With Anomalize

By Russ McRee, Group Program Manager of Microsoft’s Windows and Devices Group

When, in October and November’s toolsmith posts, I (Russ McRee) redefined DFIR under the premise of Deeper Functionality for Investigators in R, I discovered a “tip of the iceberg” scenario. To that end, I’d like to revisit the DFIR concept with an additional discovery and opportunity. In reality, this is a case of Deeper Functionality for Investigators in R within the original and paramount activity of Digital Forensics/Incident Response (DFIR).

Massive Data Requires Algorithmic Methods

Those of us in the DFIR practice, and Blue Teaming at large, are overwhelmed by data and scale. Success truly requires algorithmic methods. If you’re not already invested here, I have an immediately applicable case study for you in tidy anomaly detection with anomalize.

First, let me give credit where entirely due for the work that follows. Everything I discuss and provide is directly derived from Business Science (@bizScienc), specifically Matt Dancho (@mdancho84). When a client asked Business Science to build an open source anomaly detection algorithm that suited their needs, Matt created anomalize:

“a tidy anomaly detection algorithm that’s time-based (built on top of tibbletime) and scalable from one to many time series,”

I’d say he responded beautifully.

Anomalizing in InfoSec: Threat Hunting At Scale

When his blogpost introducing anomalize hit my (Russ’s) radar via R-Bloggers, it lived as an open tab in my browser for more than a month until it finally produced this toolsmith article. Please consider Matt’s post a mandatory read as step one of the process here. I’ll quote Matt specifically before shifting context:

“Our client had a challenging problem: detecting anomalies in time series on daily or weekly data at scale. Anomalies indicate exceptional events, which could be increased web traffic in the marketing domain or a malfunctioning server in the IT domain. Regardless, it’s important to flag these unusual occurrences to ensure the business is running smoothly. One of the challenges was that the client deals with not one time series but thousands that need to be analyzed for these extreme events.”

-Matt Dancho, creator of anomalize

Key takeaway: Detecting anomalies in time series on daily or weekly data at scale. Anomalies indicate exceptional events.

Now shift context with me to security-specific events and incidents, as they pertain to security monitoring, incident response, and threat hunting. In my November 2017 post on redefining DFIR, I discussed Time Series Regression (TSR) with the Holt-Winters method and a focus on seasonality and trends. Unfortunately, I couldn’t share the code for how we applied TSR, but pointed out alternate methods, including Seasonal and Trend Decomposition using Loess (STL), which:

- Handles any type of seasonality (which can change over time)

- Automatically smooths the trend-cycle (this can also be controlled by the user)

- Is robust to outliers (high leverage points that can shift the mean)
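To make the STL idea concrete, here is a minimal sketch that uses base R's stl() rather than anomalize itself. The synthetic series, the weekly frequency, and the spike injected on day 30 are all assumptions invented for illustration; they are not taken from my log data.

```r
# Minimal STL sketch with base R's stats::stl() (not the anomalize package).
# All data below is synthetic and invented for illustration.
set.seed(42)
n_days <- 56
day <- seq_len(n_days)

# Slow upward trend + weekly cycle + small noise
counts <- 10 + 0.05 * day + 3 * sin(2 * pi * day / 7) + rnorm(n_days, sd = 0.5)

# Inject one artificial spike so there is something unusual to find
counts[30] <- counts[30] + 15

# stl() needs a ts object with a seasonal frequency (7 = weekly pattern in daily data)
series <- ts(counts, frequency = 7)

# robust = TRUE down-weights outliers while fitting the seasonal and trend parts
fit <- stl(series, s.window = "periodic", robust = TRUE)
components <- fit$time.series   # columns: seasonal, trend, remainder

# The three components add back up to the observed series
sums_match <- isTRUE(all.equal(as.numeric(rowSums(components)), counts))
print(sums_match)

# The injected spike should dominate the remainder component
spike_day <- which.max(abs(components[, "remainder"]))
print(spike_day)
```

The point of the sketch is that seasonality and trend are stripped away first, so an unusual day stands out in the remainder instead of being masked by the weekly pattern.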

Here now, Matt has created a means to immediately apply the STL method, along with the Twitter method (reference page), as part of his time_decompose() function, one of three functions specific to the anomalize package. The anomalize package includes the following main functions:

- time_decompose(): Separates the time series into seasonal, trend, and remainder components. The methods used, including STL and Twitter, are described in Matt’s reference page.

- anomalize(): Applies anomaly detection methods to the remainder component. The methods used, including IQR and GESD, are described in Matt’s reference page.

- time_recompose(): Calculates limits that separate the “normal” data from the anomalies.
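The last two steps can be sketched by hand in base R. This is a simplified stand-in and not the package's code: the baseline and observed values are invented, and the 3x IQR multiplier is an assumption chosen for illustration rather than a documented anomalize default.

```r
# Hand-rolled sketch of "flag the remainder, then recompose limits".
# Values are invented; the 3x multiplier is an illustrative assumption.
baseline <- c(8, 8, 9, 9, 8, 8, 9, 9, 8, 8)     # stands in for season + trend
observed <- c(7, 9, 10, 8, 9, 7, 10, 9, 8, 30)  # last day is unusually high
remainder <- observed - baseline

# IQR fence on the remainder
q <- unname(quantile(remainder, c(0.25, 0.75)))
fence <- 3 * (q[2] - q[1])
remainder_lower <- q[1] - fence
remainder_upper <- q[2] + fence
is_anomaly <- remainder < remainder_lower | remainder > remainder_upper

# "Recompose": shift the remainder limits back onto the scale of the observed data
lower_limit <- baseline + remainder_lower
upper_limit <- baseline + remainder_upper

result <- data.frame(observed, lower_limit, upper_limit, is_anomaly)
print(result)
```

Working on the remainder and then adding the baseline back is what lets the limits follow the seasonal shape of the data instead of being a single flat threshold.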

Matt ultimately set out to build a scalable adaptation of Twitter’s AnomalyDetection package in order to address his client’s challenges in dealing with not one time series but thousands needing to be analyzed for extreme events. You’ll note that Matt describes anomalize using a dataset (tidyverse_cran_downloads that ships with anomalize) that contains the daily download counts of the 15 tidyverse packages from CRAN, relevant as he leverages the tidyverse package.

I initially toyed with tweaking Matt’s demo to model downloads for security-specific R packages (yes, there are such things) from CRAN, including:

The latter two packages are courtesy of Bob Rudis of our beloved Data-Driven Security: Analysis, Visualization and Dashboards. Alas, this was a mere rip and replace, and really didn’t exhibit the use of anomalize in a deserving, varied, truly security-specific context. That said, I was able to generate immediate results doing so, as seen in Figure 1: Security R Anomalies.

As an initial experiment, you can replace package names with those of your choosing in tidyverse_cran_downloads, run it in RStudio, then tweak variable names and labels in the code per Matt’s README page.

Code Tutorial: Anomalizing A Real Security Scenario

I wanted to run anomalize against a real security data scenario, so I went back to the dataset from the original DFIR articles where I’d utilized counts of 4624 Event IDs per day, per user, on a given set of servers. As utilized originally, I’d represented results specific to only one device and user, but herein is the beauty of anomalize. We can achieve quick results across multiple time series (multiple systems/users). This premise is but one of many where time series analysis and seasonality can be applied to security data.
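For readers starting from raw events rather than ready-made counts, the following base R sketch shows one way to arrive at daily logon counts per server. The events data frame, its column names, and the server names are hypothetical and invented for illustration; my actual export pipeline is not shown here.

```r
# Hypothetical raw event records; column names and values are invented.
events <- data.frame(
  timestamp = as.POSIXct(c("2017-05-22 08:01:00", "2017-05-22 09:15:00",
                           "2017-05-22 09:20:00", "2017-05-23 07:45:00",
                           "2017-05-23 10:30:00"), tz = "UTC"),
  server    = c("SERVER-A", "SERVER-A", "SERVER-B", "SERVER-A", "SERVER-A"),
  event_id  = c(4624L, 4624L, 4624L, 4625L, 4624L),
  stringsAsFactors = FALSE
)

# Keep only successful logons (Event ID 4624)
logons <- events[events$event_id == 4624L, ]

# Truncate timestamps to calendar days
logons$date <- as.Date(logons$timestamp, tz = "UTC")

# Count events per day, per server
daily_counts <- aggregate(event_id ~ date + server, data = logons, FUN = length)
names(daily_counts)[names(daily_counts) == "event_id"] <- "count"
print(daily_counts)
```

The result has the same date, count, server shape as the log.csv used in the rest of this tutorial, which is the layout a grouped time series analysis expects.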

For those that would like to follow along, load the following libraries.

library(tidyverse)

library(tibbletime)

library(anomalize)

I originally tried to read log data from log.csv straight into a tibble in an anomalize.R script with logs = read_csv("log.csv") (ready your troubles with tibbles jokes), but it was not being parsed accurately, particularly the time attributes. To correct this, from Matt’s GitHub I grabbed tidyverse_cran_downloads.R, and modified it as follows:

# Path to security log data

logs_path <- "https://raw.githubusercontent.com/holisticinfosec/toolsmith_R/master/anomalize/log.csv"

# Group by server, Convert to tibbletime

security_access_logs <- read_csv(logs_path) %>%

group_by(server) %>%

as_tbl_time(date)

security_access_logs

## # A time tibble: 198 x 3

## # Index: date

## # Groups: server [3]

## date count server

## <date> <int> <chr>

## 1 2017-05-22 7 SERVER-549521

## 2 2017-05-23 9 SERVER-549521

## 3 2017-05-24 12 SERVER-549521

## 4 2017-05-25 4 SERVER-549521

## 5 2017-05-26 4 SERVER-549521

## 6 2017-05-30 2 SERVER-549521

## 7 2017-05-31 10 SERVER-549521

## 8 2017-06-01 14 SERVER-549521

## 9 2017-06-02 12 SERVER-549521

## 10 2017-06-05 7 SERVER-549521

## # ... with 188 more rows

This helped greatly thanks to the tibbletime package, which “is an extension that allows for the creation of time aware tibbles”. Some immediate advantages of this include the ability to perform time-based subsetting on tibbles, and to quickly summarise and aggregate results by time periods. Guess what, Matt’s colleague, Davis Vaughan, is the one who wrote tibbletime too. 🙂
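As a rough base R analogue of what time-aware parsing, subsetting, and aggregation look like (this does not use tibbletime), the sketch below reads the ten rows printed above with explicit column types, keeps only June, and sums counts by month. The csv_text variable and the month column are names introduced here for illustration.

```r
# The ten rows printed above, inlined so the sketch runs without a download
csv_text <- "date,count,server
2017-05-22,7,SERVER-549521
2017-05-23,9,SERVER-549521
2017-05-24,12,SERVER-549521
2017-05-25,4,SERVER-549521
2017-05-26,4,SERVER-549521
2017-05-30,2,SERVER-549521
2017-05-31,10,SERVER-549521
2017-06-01,14,SERVER-549521
2017-06-02,12,SERVER-549521
2017-06-05,7,SERVER-549521"

# Declaring column classes up front avoids dates being parsed as plain text
logs <- read.csv(text = csv_text,
                 colClasses = c("Date", "integer", "character"))

# Time-based subsetting: keep only June 2017
june <- logs[logs$date >= as.Date("2017-06-01") &
             logs$date <= as.Date("2017-06-30"), ]

# Aggregation by time period: total logons per month, per server
logs$month <- format(logs$date, "%Y-%m")
monthly <- aggregate(count ~ month + server, data = logs, FUN = sum)
print(monthly)
```

With a true Date column in place, both operations are ordinary comparisons and groupings, which is the convenience a time-aware tibble wraps up for you.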

I then followed Matt’s sequence as he posted on Business Science, but with my logs defined as a function in Security_Access_Logs_Function.R. Below, I’ll give you the code snippets, as revised from Matt’s examples, followed by their respective results specific to processing my Event ID 4624 daily count log.

First, let’s summarize daily logon counts across three servers over four months.

The result is evident in Figure 2: Server Logon Counts.

# plot login counts across 3 servers

security_access_logs %>%

ggplot(aes(date, count)) +

geom_point(color = "#2c3e50", alpha = 0.5) +

facet_wrap(~ server, scale = "free_y", ncol = 3) +

theme_minimal() +

theme(axis.text.x = element_text(angle = 30, hjust = 1)) +

labs(title = "Figure 2: Server Logon Counts",

subtitle = "Data from Security Event Logs, Event ID 4624")

Next, let’s determine which daily logon counts are anomalous with Matt’s three main functions, time_decompose(), anomalize(), and time_recompose(), along with the visualization function, plot_anomalies(), across the same three servers over four months. The result is revealed in Figure 3: Security Event Log Anomalies.

# Detect and plot security event log anomalies

security_access_logs %>%

# Data Manipulation / Anomaly Detection

time_decompose(count, method = "stl") %>%

anomalize(remainder, method = "iqr") %>%

time_recompose() %>%

# Anomaly Visualization

plot_anomalies(time_recomposed = TRUE, ncol = 3, alpha_dots = 0.25) +

labs(title = "Figure 3: Security Event Log Anomalies", subtitle = "STL + IQR Methods")

Next, we can compare method combinations for the time_decompose() and anomalize() methods:

These are two different decomposition and anomaly detection approaches.

Twitter + GESD:

Following Matt’s method using Twitter’s AnomalyDetection package, combining time_decompose(method = "twitter") with anomalize(method = "gesd"), while setting trend = "4 months" to adjust the median spans, we’ll focus only on SERVER-549521. In Figure 4: SERVER-549521 Anomalies, Twitter + GESD, you’ll note that there are anomalous logon counts on SERVER-549521 in June.

# Get only SERVER549521 access

SERVER549521 <- security_access_logs %>%

filter(server == "SERVER-549521") %>%

ungroup()

# Anomalize using Twitter + GESD

SERVER549521 %>%

# Twitter + GESD

time_decompose(count, method = "twitter", trend = "4 months") %>%

anomalize(remainder, method = "gesd") %>%

time_recompose() %>%

# Anomaly Visualization

plot_anomalies(time_recomposed = TRUE) +

labs(title = "Figure 4: SERVER-549521 Anomalies", subtitle = "Twitter + GESD Methods")
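For intuition about what a GESD test does, here is a textbook-style sketch of the generalized extreme Studentized deviate procedure in base R. It is an assumption-laden illustration, not the code anomalize runs internally: the function name gesd_outliers, its arguments, and the sample vector are all hypothetical.

```r
# Textbook generalized ESD (Rosner) sketch; hypothetical helper, not anomalize's code.
gesd_outliers <- function(x, max_outliers = 3, alpha = 0.05) {
  n <- length(x)
  idx <- seq_len(n)            # positions still under consideration
  removed <- integer(0)        # positions removed so far, in order
  stat <- numeric(max_outliers)
  crit <- numeric(max_outliers)

  for (i in seq_len(max_outliers)) {
    # Test statistic: largest absolute deviation from the mean, in sd units
    dev <- abs(x[idx] - mean(x[idx]))
    j <- which.max(dev)
    stat[i] <- dev[j] / sd(x[idx])

    # Remove the most extreme point before the next round
    removed <- c(removed, idx[j])
    idx <- idx[-j]

    # Critical value for round i, from the t distribution
    p <- 1 - alpha / (2 * (n - i + 1))
    t_crit <- qt(p, df = n - i - 1)
    crit[i] <- (n - i) * t_crit / sqrt((n - i - 1 + t_crit^2) * (n - i + 1))
  }

  # The number of outliers is the largest round whose statistic exceeds its critical value
  n_out <- max(c(0, which(stat > crit)))
  removed[seq_len(n_out)]
}

# Invented remainder values with one obvious spike in the last position
remainder <- c(10, 11, 9, 10, 12, 10, 11, 9, 10, 11, 50)
flagged <- gesd_outliers(remainder)
print(flagged)
```

Because each round removes the most extreme point before recomputing the mean and standard deviation, one large outlier is less able to hide a second one, which is the usual argument for GESD over a single-pass test.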

STL + IQR:

Again, we note anomalies in June, as seen in Figure 5: SERVER-549521 Anomalies, STL + IQR. Obviously, the results are quite similar, as one would hope.

# STL + IQR

SERVER549521 %>%

# STL + IQR Anomaly Detection

time_decompose(count, method = "stl", trend = "4 months") %>%

anomalize(remainder, method = "iqr") %>%

time_recompose() %>%

# Anomaly Visualization

plot_anomalies(time_recomposed = TRUE) +

labs(title = "Figure 5: SERVER-549521 Anomalies",

subtitle = "STL + IQR Methods")

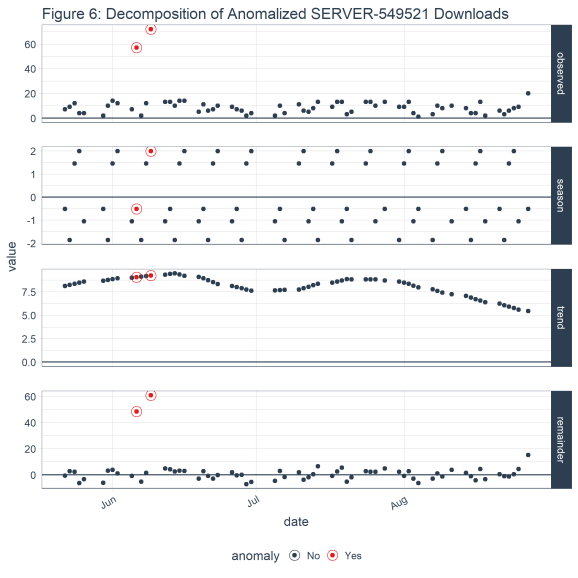

Finally, let’s use Matt’s plot_anomaly_decomposition() for visualizing the inner workings of how the algorithm detects anomalies in the remainder for SERVER-549521. The result is a four-part visualization, including observed, season, trend, and remainder, as seen in Figure 6.

# Created from Anomalize project, Matt Dancho

# https://github.com/business-science/anomalize

SERVER549521 %>%

time_decompose(count) %>%

anomalize(remainder) %>%

plot_anomaly_decomposition() +

labs(title = "Figure 6: Decomposition of Anomalized SERVER-549521 Logons")

Future Work In InfoSec: Anomalize At A Larger Scale

I’m really looking forward to putting these methods to use at a much larger scale, across a far broader event log dataset. I firmly assert that Blue Teams are already way behind in combating automated adversary tactics and problems of sheer scale, so…much…data. It’s only with tactics such as Matt’s anomalize package, and others of its ilk, that defenders can hope to succeed. Be sure to watch Matt’s YouTube video on anomalize. Business Science is building a series of videos in addition, so keep an eye out there and on their GitHub for more great work that we can apply a blue team/defender’s context to.

All the code snippets are in my GitHub Gist, and the sample log file, a single R script, and a Jupyter Notebook are all available for you on my GitHub under toolsmith_r. I hope you find anomalize as exciting and useful as I have. Great work by Matt, and I’m looking forward to seeing what’s next from Business Science.

Cheers… until next time.

About The Author

Russ McRee is Group Program Manager of the Blue Team for Microsoft’s Windows and Devices Group (WDG), now part of the Cloud and AI (C+AI) organization. He writes toolsmith, a monthly column for information security practitioners, and has written for other publications including Information Security, (IN)SECURE, SysAdmin, and Linux Magazine.

Russ has spoken at events such as DEFCON, Derby Con, BlueHat, Black Hat, SANSFIRE, RSA, and is a SANS Internet Storm Center handler. He serves as a joint forces operator and planner on behalf of Washington Military Department’s cyber and emergency management missions. Russ advocates for a holistic approach to the practice of information assurance as represented by holisticinfosec.org.

Business Science University

If you are looking to take the next step and learn Data Science For Business (DS4B), Business Science University is for you! Our goal is to empower data scientists through teaching the tools and techniques we implement every day. You’ll learn:

- Data Science Framework: Business Science Problem Framework

- Tidy Eval

- H2O Automated Machine Learning

- LIME Feature Explanations

- Sensitivity Analysis

- Tying data science to financial improvement

All while solving a REAL WORLD CHURN PROBLEM: Employee Turnover!

Special Autographed “Deep Learning With R” Giveaway!!!

One lucky student who enrolls in June will receive an autographed copy of Deep Learning With R, signed by JJ Allaire, Founder of RStudio and DLwR co-author.

DS4B Virtual Workshop: Predicting Employee Attrition

Did you know that an organization that loses 200 high performing employees per year is essentially losing $15M/year in lost productivity? Many organizations don’t realize this because it’s an indirect cost. It goes unnoticed. What if you could use data science to predict and explain turnover in a way that managers could make better decisions and executives would see results? You will learn the tools to do so in our Virtual Workshop. Here’s an example of a Shiny app you will create.

Shiny App That Predicts Attrition and Recommends Management Strategies, Taught in HR 301

Our first Data Science For Business Virtual Workshop teaches you how to solve this employee attrition problem in four courses that are fully integrated:

- HR 201: Predicting Employee Attrition with h2o and lime

- HR 301 (Coming Soon): Building A Shiny Web Application

- HR 302 (EST Q4): Data Story Telling With RMarkdown Reports and Presentations

- HR 303 (EST Q4): Building An R Package For Your Organization, tidyattrition

The Virtual Workshop is intended for intermediate and advanced R users. It’s code intensive (like these articles), but also teaches you fundamentals of data science consulting including CRISP-DM and the Business Science Problem Framework. The content bridges the gap between data science and the business, making you even more effective and improving your organization in the process.

Don’t Miss A Beat

- Sign up for the Business Science blog to stay updated

- Enroll in Business Science University to learn how to solve real-world data science problems from Business Science

- Check out our Open Source Software

Connect With Business Science

If you like our software (anomalize, tidyquant, tibbletime, timetk, and sweep), our courses, and our company, you can connect with us:

- business-science on GitHub

- Business Science, LLC on LinkedIn

- bizScienc on twitter

- Business Science, LLC on Facebook
