Streamline Your Mechanical Turk Workflow with MTurkR

I’ve been using Thomas Leeper’s MTurkR package to administer my most recent Mechanical Turk study, an extension of work with Justin Grimmer and Sean Westwood on representative-constituent communication, specifically on claiming credit for pork benefits. MTurkR is excellent, making it quick and easy to:

- test a project in your MTurk sandbox

- deploy a project live

- download the completed HIT data from Amazon

- issue credit to workers after you run your own validation checks in R

- issue bonuses en masse

All of this happens programmatically via the API.

In this post I’ll walk you through the set-up process and a couple of basic examples. But first a few words to motivate your interest in Mechanical Turk.

On Mechanical Turk and Social Science

Mechanical Turk has proven to be a boon for social science research. In my last post, on creating labels for supervised text classification, I discussed how it’s a great way to obtain data based on human judgments (e.g., content analysis, coding images, etc.).

It’s also a great source of experimental subjects in a field often plagued by small samples, which have lower power and higher false discovery rates (especially when researchers exploit “undisclosed flexibility” in their design and analysis). Mechanical Turk provides a large, geographically and demographically diverse sample of participants, freeing researchers from the confines of undergraduate samples, which can respond differently than other key populations.

Mechanical Turk also provides a much lower-cost experimental platform. Compared to a national survey research firm, the cost per subject is dramatically lower, and most survey firms simply do not allow the same design flexibility, generally limiting you to question-wording manipulations. Mechanical Turk is also cheaper than running a lab study in terms of the researcher’s time, or that of her graduate students. This is true even for web studies: just tracking undergraduate participants and figuring out who should get course credit is a mind-numbing, error-prone, and frustrating process. Mechanical Turk makes it easy for the experimenter to manage data collection, perform validation checks, and quickly disburse payment to subjects who actually participated. I’ll show you how to automate as much of this process as possible in the sections below.

But are results from Mechanical Turk valid, and will I be able to publish on MTurk data? Evidence that the answer is “yes” is accumulating. Berinsky, Huber, and Lenz (2012) published a validation study in Political Analysis that replicates important published experimental work using the platform. Furthermore, Justin Grimmer, Sean Westwood, and I published a study in the American Political Science Review on how people respond when congressional representatives announce political pork, in which we provide extensive Mechanical Turk validation in the online appendix. We provide demographic comparisons to the U.S. Census, show the regional distribution of Turkers by state and congressional district, and show that correlations between political variables are about the same as in national survey samples. Sean and I also published a piece in Communication Research on how social cues drive the news we consume, which among other things replicates past findings on partisans’ preferences for news with a partisan slant.

But are Turkers paying enough attention to actually process my stimuli? It’s hard to know for sure, but there is an array of questions one can deploy that are designed to make sure Turkers are paying attention. When my group runs MTurk studies, we typically ask a few no-brainer qualifying questions at the beginning of any task (e.g., 5 + 7 = 13, True or False?), then bury some specific instructions in a paragraph of text toward the end (e.g., bla bla bla, write “I love Mechanical Turk” in the box asking if you have any comments).

But we are paying Turkers; doesn’t that make them more inclined toward demand effects? Well, compared to what? Compared to a student who needs class credit or a paid subject? On the contrary, running a study on the web ensures that researchers in a lab do not provide non-verbal cues (of which they are unaware) that can give rise to demand effects. Of course, in any experimental setting it’s important to think carefully about potential demand effects, especially when deploying noticeable or obvious manipulations, or when you have a within-subject design in which respondents observe multiple conditions.

Set-Up

Install the most recent version of MTurkR from Tom’s GitHub repo. You can use Hadley’s excellent devtools package to install it:

# install.packages("devtools")
library(devtools)
# Current devtools expects the repository as "user/repo";
# the separate username argument is no longer supported
install_github("leeper/MTurkR")

If you don’t have a Mechanical Turk account, get one. Next, set up and fund your account; there are plenty of good walkthroughs online if you run into trouble.

Also, set up your sandbox so you can test things before you actually spend real money.

You’ll also need to get your AWS Access Key ID and AWS Secret Access Key to get access to the API. Click on “Show” under “Secret Access Key” to actually see it.

I believe you can use the MTurkR package to design a questionnaire and handle randomization, but that’s beyond the scope of this post—for now just create a project as you normally would via the GUI.

Examples

First, make sure your credentials work correctly and check your account balance (replace the dummy credentials with your own):

library(MTurkR)
credentials(c("AKIAXFL6UDOMAXXIHBQZ","oKPPL/ySX8M7RIXquzUcuyAZ8EpksZXmuHLSAZym"))
AccountBalance()
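Pasting keys into a script makes them easy to leak if the script is ever shared or committed. One alternative, sketched here under the assumption that the keys are stored in environment variables named AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, is to read them at run time and pass the result to credentials(). The variable names and the read_aws_keys() helper are illustrative and not part of MTurkR:

```r
# Hypothetical helper (not part of MTurkR): read the two keys from
# environment variables instead of hard-coding them in the script.
read_aws_keys <- function(id_var = "AWS_ACCESS_KEY_ID",
                          secret_var = "AWS_SECRET_ACCESS_KEY") {
  keys <- c(Sys.getenv(id_var), Sys.getenv(secret_var))
  # Sys.getenv() returns "" for unset variables, so fail loudly in that case
  if (any(keys == "")) {
    stop("Set ", id_var, " and ", secret_var, " before running this script.")
  }
  keys
}

# Usage with the call shown above (requires MTurkR to be loaded):
# credentials(read_aws_keys())
```

The helper returns the keys in the same order as the credentials() call above: access key ID first, secret key second.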

Great, now R is talking to the API. Now you can actually create HITs. Let’s start in your sandbox. Create a project in your sandbox, or copy one you’ve already created by pasting the HTML from the design layout screen in your production account into your sandbox account.

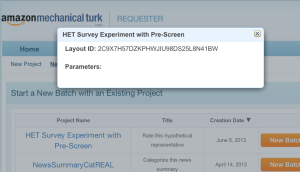

Now you need to know the HIT layout id. Make sure you are in the “Create” tab in the requester interface, and click on your Project Name. This window will pop up:

Copy and paste the Layout ID into your R script; you’ll use it in a second. With the Layout ID in hand, you can create HITs based on this layout. Let’s create a HIT in our sandbox first. We’ll first set the qualifications that Mechanical Turkers must meet, then tell the API to create a new HIT in the sandbox with 1200 assignments for $0.50 each. Modify the parameters below to fit your needs.

# First set qualifications
# ListQualificationTypes() to see different qual types
qualReqs = paste(
  # Set Location to US only
  GenerateQualificationRequirement(
    "Location", "==", "US"),
  # Worker_PercentAssignmentsApproved
  GenerateQualificationRequirement(
    "000000000000000000L0", ">", "80",
    qual.number=2),
  # Un-comment after sandbox test
  # Worker_NumberHITsApproved
  # GenerateQualificationRequirement(
  #   "00000000000000000040", ">", "100",
  #   qual.number=3),
  sep="" )

# Create new batch of hits:
newHIT = CreateHIT(
  # layoutid in sandbox:
  hitlayoutid="22P2J1LY58B74P14WC6KKD16YGOR6N",
  sandbox=T,
  # layoutid in production:
  # hitlayoutid="2C9X7H57DZKPHWJIU98DS25L8N41BW",
  annotation = "HET Experiment with Pre-Screen",
  assignments = "1200",
  title="Rate this hypothetical representative",
  description="It's easy, just rate this hypothetical representative on how well she delivers funds to her district",
  reward=".50",
  duration=seconds(hours=4),
  expiration=seconds(days=7),
  keywords="survey, question, answers, research, politics, opinion",
  auto.approval.delay=seconds(days=15),
  qual.reqs=qualReqs
)
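Before switching from the sandbox to production, it can help to work out what a batch of this size will cost. The sketch below uses the 1200 assignments and $0.50 reward from the call above, which comes to $600 in worker payments. The commission rate is a placeholder assumption, not Amazon’s actual fee, so look up the current fee schedule before relying on the total:

```r
# Batch size and reward taken from the CreateHIT() call above
assignments <- 1200
reward <- 0.50

# ASSUMPTION: placeholder commission rate, not Amazon's actual fee
fee_rate <- 0.20

# Amount paid to workers, and the total including the assumed commission
worker_payments <- assignments * reward
total_cost <- worker_payments * (1 + fee_rate)

worker_payments
total_cost
```

Comparing total_cost with the result of AccountBalance() is a quick way to confirm the account is funded before launch.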

To make sure everything worked as intended, go to your worker sandbox and search for the HIT you just created. See it? Great.

Here’s how to check on the status of your HIT:

# Get HITId (record result below)
newHIT$HITId
# "2C2CJ011K274LPOO4SX1EN488TRCAG"
HITStatus(hit="2C2CJ011K274LPOO4SX1EN488TRCAG")

And now here’s the really awesome and time-saving bit: downloading the most recent results. If you don’t use the API, you have to do this manually from the website GUI, which is a pain. Here’s the code:

review = GetAssignments(hit="2C2CJ011K274LPOO4SX1EN488TRCAG", status="Submitted", return.all=T)

Now you probably want to actually run your study with real subjects. You can copy your project design HTML back to the production site and repeat the above for production when you are ready to launch your HIT.

Often, Turkers will notice something about your study and point it out, hoping that it will prove useful and you will grant them a bonus. If they mention something helpful, grant them a bonus! Here’s how (replace dummy workerid with the id for the worker to whom you wish to grant a bonus):

## Grant bonus to worker who provided helpful comment:
bonus = GrantBonus(workers="A2VDVPRPXV3N59",
  assignments=review$AssignmentId[review$WorkerId=="A2VDVPRPXV3N59"],
  amounts="1.00",
  reasons="Thanks for the feedback!")

You’ll also save time when you go to validate and approve HITs, which MTurkR allows you to do from R. My group usually uses Qualtrics for questionnaires that accompany our studies where we build in the attention check described above. Here’s how to automate the attention check and approve HITs for those who passed:

svdat = read.csv(unzip("data/Het_Survey_Experiment.zip"), skip=1, as.is=T)

# Rows whose comment approximately matches the attention-check phrase
approv = agrep("I love Mechanical Turk",
               svdat$Any.other.comments.or.questions.,
               max.distance=.3)
# Inspect the matched comments
svdat$Any.other.comments.or.questions.[approv]

# Assignments whose confirmation code belongs to a respondent who passed
correct = review$AssignmentId[
  gsub(" ", "", review$confcode) %in% svdat$GUID[approv] ]

# To approve:
approved = approve(assignments=correct)

Note that “agrep()” is an approximate matching function which I use in case Turkers add quotes or other miscellaneous text that shouldn’t disqualify their work.
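To see how the fuzzy match and the confirmation-code lookup fit together, here is a small self-contained sketch. All of the data is invented for illustration; only the column names mirror the ones used above:

```r
# Toy survey export (invented values): survey ID plus the comment box
svdat <- data.frame(
  GUID = c("a1", "b2", "c3", "d4"),
  Any.other.comments.or.questions. = c(
    "\"I love Mechanical Turk\"",  # exact phrase wrapped in quotes
    "i love mechanical turk!",     # wrong case plus extra punctuation
    "none",
    "Great study"
  ),
  stringsAsFactors = FALSE
)

# Toy assignment data (invented values): codes may carry stray spaces
review <- data.frame(
  AssignmentId = c("ASSIGN1", "ASSIGN2", "ASSIGN3", "ASSIGN4"),
  confcode = c(" a1", "b2 ", "c3", "zz"),
  stringsAsFactors = FALSE
)

# Indices of comments that approximately contain the phrase
approv <- agrep("I love Mechanical Turk",
                svdat$Any.other.comments.or.questions.,
                max.distance = 0.3)

# Assignments whose cleaned confirmation code matches a passing respondent
correct <- review$AssignmentId[
  gsub(" ", "", review$confcode) %in% svdat$GUID[approv]
]
correct
```

With this toy data the first two assignments should be selected. A looser max.distance admits more variation but also raises the risk of false positives, so it is worth eyeballing the matched comments before approving anything.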

But that’s not all. Suppose we want to check the average time per HIT (to make sure we are paying Turkers enough) or start analyzing our data. With MTurkR we can do this via the API rather than downloading files from the website.

# check average time:
review = GetAssignments(hit="2C2CJ011K274LPOO4SX1EN488TRCAG",
                        status="Approved", return.all=T)
# Check distribution of time (in minutes) each Turker takes:
quantile(review$SecondsOnHIT/60)
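One way to judge whether the pay is adequate is to convert completion times into an effective hourly rate. The sketch below uses invented completion times and a hypothetical target wage. In practice the times would come from review$SecondsOnHIT, and the target is your own call:

```r
# ILLUSTRATIVE completion times in seconds; in practice use review$SecondsOnHIT
seconds_on_hit <- c(300, 420, 600, 900)
reward <- 0.50         # reward per assignment, as in the HIT above
target_hourly <- 6.00  # ASSUMPTION: hypothetical target wage, choose your own

# Effective hourly rate at the median completion time
median_hours <- median(seconds_on_hit) / 3600
effective_hourly <- reward / median_hours

# Per-assignment top-up needed to reach the target at the median time
bonus_needed <- max(0, target_hourly * median_hours - reward)

effective_hourly
bonus_needed
```

A positive bonus_needed suggests the reward is too low for the time the task takes, and its value gives a starting point for the amount passed to GrantBonus().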

If we discover we aren’t paying Turkers enough in light of how long they spend on the HIT, which in addition to being morally wrong means that we will probably not collect a sufficient number of responses in a timely fashion, MTurkR allows us to quickly remedy the situation. We can expire the HIT, grant bonuses to those who completed the low-pay HIT, and relaunch. Here’s how:

# Low pay hits:
HITStatus(hit="2JV21O3W5XH0L74WWYBYKPLU3XABH0")

# Review remaining HITS and approve:
review = GetAssignments(hit="2JV21O3W5XH0L74WWYBYKPLU3XABH0",
                        status="Submitted", return.all=T)
correct = review$AssignmentId[ which( gsub(" ", "", review$confcode) %in% svdat$GUID[approv] ) ]
approved = approve(assignments=correct)

# Expire remaining:
ExpireHIT(hit="2JV21O3W5XH0L74WWYBYKPLU3XABH0")
approvedLowMoneyHits = GetAssignments(hit="2JV21O3W5XH0L74WWYBYKPLU3XABH0",
                                      status="Approved", return.all=T)

# Grant bonus to workers who completed hit already:
bonus = GrantBonus(workers=approvedLowMoneyHits$WorkerId,
                   assignments=approvedLowMoneyHits$AssignmentId,
                   amounts="1.00",
                   reasons="Upping the pay for this HIT, thanks for completing it!")

We can now run the administrative side of our Mechanical Turk HITs from R directly. It’s a much more efficient workflow.
