Launch Apache Spark on AWS EC2 and Initialize SparkR Using RStudio


This post was first published on SparkIQ Labs’ blog and re-posted on my personal blog.

Introduction

In this blog post, we shall learn how to launch a standalone Spark cluster on Amazon Web Services (AWS) Elastic Compute Cloud (EC2) for the analysis of Big Data. This continues our previous post, which showed how to download Apache Spark and start SparkR locally on Windows with RStudio.

We shall use Spark 1.5.1 (released on October 2, 2015), which ships with a spark-ec2 script for installing standalone Spark on AWS EC2. A nice feature of this script is that it also installs RStudio Server, so you don’t need to install it separately and can start working with your data as soon as Spark is installed.

Prerequisites

- You should have already downloaded Apache Spark onto your local desktop from the official site. You can find instructions on how to do so in our previous post.

- You should have an AWS account, have created your secret access key(s), and have downloaded your private key pair as a .pem file. Instructions for creating access keys and for downloading private keys are available in the AWS documentation.

- We will launch the clusters from a Bash shell on Linux. If you are using Windows, I recommend that you install and use the Cygwin terminal, which provides functionality similar to a Linux distribution on Windows.

Launching Apache Spark on AWS EC2

We shall use the spark-ec2 script, located in Spark’s ec2 directory, to launch, manage and shut down Spark clusters on Amazon EC2. It will set up Spark, HDFS, Tachyon and RStudio on your cluster.

Step 1: Go into the ec2 directory

Change directory into the “ec2” directory. In my case, I downloaded Spark onto my desktop, so I ran this command.
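As a minimal sketch, assuming Spark was unpacked on the desktop into a directory named spark-1.5.1-bin-hadoop2.6 (a hypothetical name; yours may differ), changing into the ec2 directory looks like this. The variable SPARK_DIR is introduced here only for illustration.

```shell
# Hypothetical location; adjust to wherever you unpacked Spark.
SPARK_DIR="$HOME/Desktop/spark-1.5.1-bin-hadoop2.6"

# Move into the ec2 directory, or report that it could not be found.
cd "$SPARK_DIR/ec2" || echo "ec2 directory not found under $SPARK_DIR"
```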

Step 2: Set environment variables

Set the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY to your Amazon EC2 access key ID and secret access key.
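In a Bash shell, this step can be sketched as follows. The values are placeholders, not real credentials; substitute the access key ID and secret access key from your own AWS account.

```shell
# Placeholder values only; replace them with your own credentials.
export AWS_ACCESS_KEY_ID="YOUR_ACCESS_KEY_ID"
export AWS_SECRET_ACCESS_KEY="YOUR_SECRET_ACCESS_KEY"
```

Variables exported this way last only for the current terminal session, so they need to be set again in each new shell.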

Step 3: Launch the spark-ec2 script

Launch the cluster by running the following command.

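Where:

- --key-pair=<name>, the name of your EC2 key pair

- --identity-file=<name>.pem, the private key file

- --region=<region>, the EC2 region in which to launch the cluster

- --instance-type=<type>, the EC2 instance type to use for the nodes

- -s N, where N is the number of slave nodes

- “test-cluster” is the name of the cluster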

In case you want to set other options for the launch of your cluster, further instructions can be found on the Spark documentation website.
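Putting the options from Step 3 together, a launch command might be assembled as in the sketch below. The key pair name, identity file and cluster name match the ones used in the other commands in this post, and the two slave nodes match the cluster described here; the region and instance type are assumptions chosen purely for illustration. The sketch only prints the command so that you can review it before running it from the ec2 directory.

```shell
# Values taken from the commands used elsewhere in this post.
KEY_PAIR="awskey"
IDENTITY_FILE="awskey.pem"
CLUSTER_NAME="test-cluster"
NUM_SLAVES=2

# Assumed values for illustration; pick the region and type you need.
REGION="us-east-1"
INSTANCE_TYPE="m3.medium"

LAUNCH_CMD="./spark-ec2 --key-pair=$KEY_PAIR --identity-file=$IDENTITY_FILE --region=$REGION --instance-type=$INSTANCE_TYPE -s $NUM_SLAVES launch $CLUSTER_NAME"

# Print the assembled command for review instead of executing it.
echo "$LAUNCH_CMD"
```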

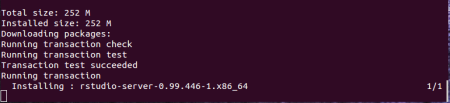

As I mentioned earlier, this script also installs RStudio server, as can be seen in the figure below.

The cluster installation takes about 7 minutes. When it is done, the host address of the master node is displayed at the end of the log output, as shown in the figure below. At this point your Spark cluster has been installed successfully and you are ready to start exploring and analyzing your data.

Before you continue, you may be curious to see whether your cluster is actually up and running. Simply log into your AWS account and go to the EC2 dashboard. In my case, I have 1 master node and 2 slave/worker nodes in my Spark cluster.

Use the address displayed at the end of the launch message and access the Spark User Interface (UI) on port 8080. You can also get the host address of your master node by using the “get-master” option in the command below.

$ ./spark-ec2 --key-pair=awskey --identity-file=awskey.pem get-master test-cluster
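Once you have the master's address, the Spark UI is that host on port 8080. A small sketch, using a made-up host name in place of the one printed for your cluster:

```shell
# Hypothetical host name; use the address printed at the end of the launch
# log or returned by the get-master command.
MASTER_HOST="ec2-203-0-113-10.compute-1.amazonaws.com"

# The Spark master UI is served on port 8080.
SPARK_UI_URL="http://$MASTER_HOST:8080"
echo "$SPARK_UI_URL"
```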

Step 4: Log in to your cluster

In the terminal, you can log in to your master node by using the “login” option in the following command.

$ ./spark-ec2 --key-pair=awskey --identity-file=awskey.pem login test-cluster

Step 5 (Optional): Start the SparkR REPL

Here you can actually start the SparkR REPL by typing the following command.

$ spark/bin/sparkR

SparkR will be initialized and you should see a welcome message as shown in the figure below. Here you can actually start working with your data. However, most R users, like myself, would like to work in an Integrated Development Environment (IDE) like RStudio. See steps 6 and 7 on how to do so.

Step 6: Create user accounts

Use the following command to list all available users on the cluster.

$ cut -d: -f1 /etc/passwd

You will notice that “rstudio” is one of the available user accounts. You can create other user accounts and passwords for them using these commands.

$ sudo adduser daniel

$ passwd daniel

In my case, I used the “rstudio” user account and changed its password.
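To check for a single account rather than reading the whole list, the listing command from this step can be combined with grep. This is only a sketch; the variable names ACCOUNT and STATUS are introduced here for illustration.

```shell
# Account name to look for; "rstudio" is the account mentioned in this step.
ACCOUNT="rstudio"

# cut prints the first field (the user name) of each /etc/passwd entry;
# grep -qx succeeds only if a whole line matches the name exactly.
if cut -d: -f1 /etc/passwd | grep -qx "$ACCOUNT"; then
  STATUS="present"
else
  STATUS="missing"
fi

echo "$ACCOUNT account is $STATUS"
```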

Initializing SparkR Using RStudio

The spark-ec2 script also created a “startSpark.R” script that we shall use to initialize SparkR.

Step 7: Log in to RStudio Server

Using the username you selected or created and the password you set for it, log in to RStudio Server.
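RStudio Server listens on port 8787 by default. Assuming the spark-ec2 setup keeps that default (an assumption, not something stated in this post), the login page address can be built from the master's host name as sketched below; the host name is made up.

```shell
# Hypothetical host name; use your own master node's address.
MASTER_HOST="ec2-203-0-113-10.compute-1.amazonaws.com"

# 8787 is RStudio Server's default port (assumed unchanged by spark-ec2).
RSTUDIO_URL="http://$MASTER_HOST:8787"
echo "$RSTUDIO_URL"
```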

Step 8: Initialize SparkR

When you log in to RStudio Server, you will see the “startSpark.R” script, already created for you, in the Files pane.

Simply run the “startSpark.R” script to initialize SparkR. This creates a Spark Context and a SQL Context for you.

Step 9: Start Working with your Data

Now you are ready to start working with your data.

Here I use a simple example with the “mtcars” dataset to show that you can now run SparkR commands and use the MLlib library to fit a simple linear regression model.

You can view the status of your jobs by opening the host address of your master node on port 4040. This UI also displays the chain of RDD dependencies organized as a Directed Acyclic Graph (DAG), as shown in the figure below.

Final Remarks

The objective of this blog post was to show you how to get started with Spark on AWS EC2 and initialize SparkR using RStudio. In the next blog post we shall look into working with actual “Big” datasets stored in different data stores such as Amazon S3 or MongoDB.

Further Interests: RStudio Shiny + SparkR

I am curious about how to use Shiny with SparkR, and in the next couple of days I will investigate this idea further. The question is: how can one use SparkR to power Shiny applications? If you have any thoughts, please share them in the comments section below and let’s discuss.
