Web Scraping: The Sequel | Propwall.my

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Alright. Time to take another shot at web scraping. My attempt at scraping data off iBilik.my left me a little frustrated because of how long it took, and also because I couldn’t get much information out of it with all the duplicated posts.

I think Propwall.my would be a much better choice considering how their listings are arranged. Propwall is:

…Malaysia’s most ADVANCED property search website that provides marketing and research solutions to property agents, developers, and investors.

The emphasis on “advanced” was not mine. They just seem to be pretty sure of themselves.

I figured that since I have some idea of how to do this now, it would be a lot easier to organize my code more neatly. Well…for me at least. Plus, I could insert more comments this time.

I picked Mont Kiara as my location and started off the same way, by loading the stringr, rvest, and ggvis packages (plus dplyr and ggplot2, which come in handy later).

#load libraries

library(stringr)

library(rvest)

library(ggvis)

library(dplyr)

library(ggplot2)

#The first half of the URL..

site_first = "http://www.propwall.my/mont_kiara/classifieds?page="

#...and this is the second half of the URL

site_second = "&tab=Most%20Relevance&keywords=Mont%20Kiara%2C%20Kuala%20Lumpur&filter_id=17&filter_type=Location&listing=For%20Rent&view=list"

#concatenate them together, with the coerced digit in between them. This digit is the page number

siteCom = paste(site_first, as.character(1), site_second, sep = "")

#read_html() replaces html(), which newer versions of rvest no longer provide

siteLocHTML = read_html(siteCom)
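The URL assembly above can also be wrapped in a small helper, which makes it harder to mistype the page number later on in the loop. This is only a sketch: `page_url` is a name made up for this example, and the two URL halves are simply copied from the code above.

```r
# The two halves of the search URL, copied from the post
site_first <- "http://www.propwall.my/mont_kiara/classifieds?page="
site_second <- "&tab=Most%20Relevance&keywords=Mont%20Kiara%2C%20Kuala%20Lumpur&filter_id=17&filter_type=Location&listing=For%20Rent&view=list"

# Hypothetical helper: build the URL for search page i
page_url <- function(i) {
  paste0(site_first, i, site_second)
}

# paste0() is vectorised, so all 250 URLs can be built in one call
all_urls <- page_url(1:250)
```

Calling `page_url(1)` gives the same string as the `paste()` call in the post, since `paste0()` is just `paste()` with `sep = ""`.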

You might be wondering why I keep loading ggvis if I won’t be using its charts. Embarrassingly enough, it’s because I keep forgetting which package allows me to use the piping operator “%>%”, but I always remember that I can use that operator if ggvis is loaded. (The operator actually comes from magrittr, and rvest and dplyr both make it available too.)

Similar to what I did in the iBilik.my post, I extracted the information for the first search page, and then rbind-ed the remaining pages, like so:

#Extract the descriptions of the first page...

siteLocHTML %>% html_nodes("h4.media-heading a") %>%

html_text() %>% data.frame() -> x

#...and also the links to these postings

siteLocHTML %>% html_nodes("#list-content") %>%

html_nodes(".media") %>%

html_nodes(".media-heading") %>%

html_nodes("a") %>%

html_attr("href") %>%

data.frame() -> y

#Since we already have the extractions for the first page, we...

#...can now loop through pages 2 to 250 and rbind them with the page 1 extracts

for(i in 2:250){

siteCom = paste(site_first, as.character(i), site_second, sep = "")

siteLocHTML = read_html(siteCom) #read_html() replaces the older html()

siteLocHTML %>% html_nodes("h4.media-heading a") %>%

html_text() %>% data.frame() -> x_next

siteLocHTML %>% html_nodes("#list-content") %>%

html_nodes(".media") %>%

html_nodes(".media-heading") %>%

html_nodes("a") %>%

html_attr("href") %>%

data.frame() -> y_next

x = rbind(x, x_next)

y = rbind(y, y_next)

}

#column bind the description and links

complete = cbind(x,y)

complete[,2] = as.character(complete[,2])

names(complete) = c("Description", "Link")

#file backup

write.csv(complete, "complete_propwall.csv", row.names = FALSE)

#And remove the remaining dataframes from the environment

rm(x_next, y_next, x, y)
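Growing `x` and `y` with `rbind` inside the loop works, but the same idea can be written by extracting each page into a list and binding once at the end. The sketch below is an alternative arrangement, not the code used for this post. `extract_listings` is a hypothetical helper, and the two HTML snippets are made up to imitate the selectors above so that the example runs without touching the website. It also takes the text and the link from the same set of nodes, which keeps the two columns aligned.

```r
library(rvest)

# Hypothetical helper: pull descriptions and links out of one parsed search page
extract_listings <- function(page) {
  anchors <- html_nodes(page, "h4.media-heading a")
  data.frame(
    Description = html_text(anchors),
    Link = html_attr(anchors, "href"),
    stringsAsFactors = FALSE
  )
}

# Made-up HTML standing in for two search pages (the real pages are assumed
# to match the "h4.media-heading a" selector used in the post)
fake_pages <- list(
  read_html('<div id="list-content"><div class="media"><h4 class="media-heading"><a href="/listing/1">Vista Kiara, Mont Kiara</a></h4></div></div>'),
  read_html('<div id="list-content"><div class="media"><h4 class="media-heading"><a href="/listing/2">Aman Kiara, Mont Kiara</a></h4></div></div>')
)

# Extract every page into a list, then bind the pieces once at the end
pieces <- lapply(fake_pages, extract_listings)
complete_sketch <- do.call(rbind, pieces)
```

With the real site, `fake_pages` would be replaced by the parsed pages for page numbers 1 to 250.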

Up to this point, the code has sifted through 250 search pages with 20 listings each, which adds up to 5000 listings. However, I only have the links to the postings, and not information such as rental, layout, etc. To get that information, I would need to navigate to each link and pull out the rental, layout, and date…for 5000 posts.

I had no way of telling whether a post was duplicated or not, so I haven’t removed any of the 5000 posts on those grounds. The only observations that were removed were those with missing information (no rental info given) or a ridiculous rental rate (RM 1 million rental! Probably meant for the sale section and not the rental section).

Anywho, the next step was to get the post information, and I used the code below:

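#start with empty vectors so the loop has something to append to

price = c()

dates = c()

furnish = c()

layout = c()

for(i in 1:nrow(complete)){

siteLocHTML = read_html(complete[i,"Link"])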

siteLocHTML %>% html_nodes(".clearfix") %>%

html_nodes("#price") %>% html_text() %>% c() -> y

#Rentals come out in quadruples, but I need only one

y = unique(y)

price = c(price, y)

siteLocHTML %>% html_nodes("#content") %>%

html_nodes("p") %>% html_text() -> z

dates = c(dates, z)

siteLocHTML %>% html_nodes(".clearfix") %>%

html_nodes("td") %>% html_text() %>% c() -> a

b = a[8]

a = a[4]

furnish = c(furnish, a)

layout = c(layout, b)

}

#take only the first 4922 rows

final = complete[1:4922,]

#cbind the new columns

final$price = price

final$furnish = furnish[1:4922]

final$layout = layout[1:4922]

final$posted = dates[1:4922]

#remove NAs

final_fil = na.omit(final)

#remove other NAs as shown in the website

final_fil = final_fil[!final_fil[,4] == "-NA-",]

final_fil = final_fil[!final_fil[,5] == "-NA-",]

#remove rownames

rownames(final_fil) = NULL

final_fil$furnish = as.factor(final_fil$furnish)

final_fil$layout = as.factor(final_fil$layout)

rm(y,z,a,b,price, furnish, layout, dates)

I should mention that I ran into some issues in finishing the data extraction up to this point. The main one was that after 4922 posts, I got an error. The error had more to do with navigating to so many pages than with the code itself. With that in mind, I had to limit my sample to 4922 observations.
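One way to keep a single failed request from stopping the whole loop is to wrap the page fetch in `tryCatch`. The post doesn't say what the error actually was, so this sketch only covers the general case of a request that throws an error. `safe_fetch` is a hypothetical helper, and the `pause` argument (a short delay between requests) is my own assumption rather than something the original code did.

```r
# Hypothetical helper: fetch one page, but return NULL instead of stopping
# the whole loop when the request fails.
# `fetch` defaults to rvest's read_html(); `pause` (in seconds) is an assumed
# courtesy delay between requests.
safe_fetch <- function(url, fetch = rvest::read_html, pause = 1) {
  Sys.sleep(pause)
  tryCatch(fetch(url), error = function(e) NULL)
}

# Demonstration with stand-in fetch functions, so nothing is downloaded here
always_fails <- function(url) stop("connection dropped")
always_works <- function(url) paste("page for", url)

failed <- safe_fetch("http://example.com/a", fetch = always_fails, pause = 0)
worked <- safe_fetch("http://example.com/b", fetch = always_works, pause = 0)
```

Inside the scraping loop, a `NULL` result would mean recording `NA` for that row's price, date, furnishing and layout, so that the four vectors stay the same length as the list of links.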

Next up, a little string manipulation to get the rental rates in a format more acceptable for calculations. I also need to get only the dates from the posting information. I changed the original formatting for rentals and dates…

> "RM 5,200 (RM 3 psf)"

> "Posted by Champion YEE 016-6012080 on 02/11/2015"

…into this…

> 5200

> 02/11/2015

and this is the script I used:

#Extracting dates and rentals

x = c()

for(i in 1:nrow(final_fil)){

x[i] = substring(final_fil[i, "posted"], nchar(final_fil[i, "posted"])-9, nchar(final_fil[i, "posted"]))

}

rm(complete, final, i)

final_fil$date = x

x = c()

for(i in 1:nrow(final_fil)){

y = gregexpr("\\(",final_fil[i,"price"])[[1]][1]

x[i] = substring(final_fil[i,"price"], 4, y-2)

}

x = as.integer(str_replace_all(x, ",", ""))

final_fil$rental = x
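Both loops above can also be written without looping, because `substring()` and `sub()` work on whole vectors at once. The following is a minimal sketch using the two example strings from earlier. The variable names are made up for the example, and it assumes every price starts with "RM" followed by the amount.

```r
# The two example strings shown earlier in the post
posted <- c("Posted by Champion YEE 016-6012080 on 02/11/2015")
price <- c("RM 5,200 (RM 3 psf)")

# The date is always the last 10 characters of the posting line
date_chr <- substring(posted, nchar(posted) - 9)

# Keep only the digits and commas that follow "RM", then drop the commas
amount <- sub("^RM[[:space:]]*([0-9,]+).*$", "\\1", price)
rental <- as.integer(gsub(",", "", amount))
```

A price that does not match the pattern is left unchanged by `sub()`, so `as.integer()` turns it into `NA` with a warning, which `na.omit()` can then remove.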

And last but not least, the name of the residency. I already have the names of the residency written as, for instance, “Vista Kiara, Mont Kiara”. What I didn’t want was the “Mont Kiara” bit, and so I added another column “residency” that holds only the names:

#Extract only the name of the residency

final_fil$residency = str_replace_all(final_fil[,1], ", Mont Kiara", "")

Anyone who uses R will tell you that dplyr is awesome. It’s just….just sooo nice. Although I’m still trying to remember everything it can do, what follows is perhaps a very good example of dplyr’s awesomeness.

Since I now have the residency name, the rentals, and the layouts, I can produce a single dataframe that shows the average rental for each layout, for each residency….and all that with one line of dplyr magic:

Mkiara_data = data.frame(summarise(group_by(final_fil, residency, layout), Average_Rental = mean(rental)))

And so if we take a look at the last 6 rows of this data frame, we see:

tail(Mkiara_data)

residency layout Average_Rental

162 i-Zen Kiara I Studio 9000.000

163 i-Zen Kiara II 1-Bedroom 6165.714

164 i-Zen Kiara II 2-Bedroom 3600.000

165 i-Zen Kiara II 3-Bedroom 5450.000

166 i-Zen Kiara II 4-Bedroom 5943.750

167 i-Zen Kiara II 5-Bedroom 6668.750

Hadley Wickham should win an award just for developing that.
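The same summary can be written with the pipe, which reads from top to bottom instead of from the inside out. This is a sketch on made-up rows shaped like `final_fil`. The residency names and rental values are invented for illustration and are not from the scraped data.

```r
library(dplyr)

# Made-up rows in the same shape as final_fil (values are illustrative only)
toy <- data.frame(
  residency = c("A Kiara", "A Kiara", "A Kiara", "B Kiara"),
  layout = c("2-Bedroom", "2-Bedroom", "3-Bedroom", "3-Bedroom"),
  rental = c(3000, 5000, 6000, 4500),
  stringsAsFactors = FALSE
)

# Group by residency and layout, then average the rental within each group
toy_avg <- toy %>%
  group_by(residency, layout) %>%
  summarise(Average_Rental = mean(rental)) %>%
  ungroup() %>%
  as.data.frame()
```

Each residency and layout pair ends up as one row, so the two "A Kiara" 2-bedroom listings collapse into a single average.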

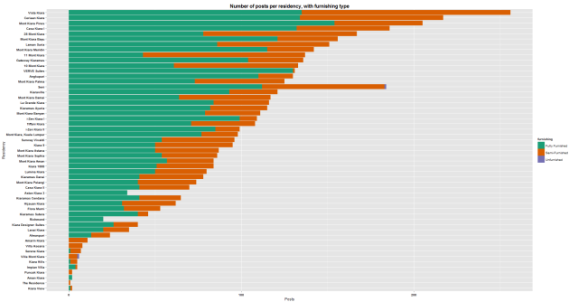

Now it’s time to explore the data with some graphs. Using the final_fil dataframe, I can make a table that counts the number of times each residency name appears, and use that data to plot a graph.

#Number of posts per residency

x = data.frame(table(final_fil$residency))

names(x) = c("Residency", "Number_of_Posts")

ggplot(x, aes(x=reorder(Residency,Number_of_Posts), y=Number_of_Posts)) +

geom_bar(stat="identity", color="white") +

xlab("Residency") + ylab("Number of posts") + coord_flip() +

ggtitle("Number of posts per residency") +

theme(plot.title=element_text(size=16, face = "bold", color = "Red"))

The resulting graph will look like this:

(You can click on the image to get a better look at the chart)

I would like to think that the higher the number of posts, the more unpopular the residency is. Obviously, there are some arguments against that, but going by that understanding we can see that there are very few posts for places such as The Residence and Aman Kiara, while places such as Vista Kiara and Ceriaan Kiara have a lot of rentals available. I’m no property guy, but I’m guessing there are plenty more factors at play here, such as when the property was launched, the type of residency (condo, house, etc), the neighborhood (metro, suburban), and so on and so forth. I’ll let you figure that one out.

Perhaps we need some more information, such as the proportion of these listings that fall under each level of furnishing.

#Create table for residency and furnishing type

z = data.frame(table(final_fil[,c("residency", "furnish")]))

z = z[z[,"Freq"] != 0,]

names(z) = c("residency", "furnishing", "Posts")

rownames(z) = NULL

#Plot

ggplot(z, aes(x=reorder(residency,Posts), y=Posts, fill=furnishing)) +

geom_bar(stat="identity") + coord_flip() +

scale_fill_brewer(palette="Dark2") +

xlab("Residency") + ggtitle("Number of posts per residency, with furnishing type") +

theme(plot.title=element_text(size=16, face = "bold", color = "Black")) +

theme(axis.text.y=element_text(face = "bold", color = "black"),

axis.text.x=element_text(face = "bold", color = "black"))

I think it’s safe to say that very few, if any, of the listings have been labeled as unfurnished. I think that’s mainly because many of the owners/agents that post on these sorts of sites classify a unit as semi-furnished if it only has things such as a fan, air-conditioner, and a kitchen cabinet; but, ironically enough, no actual furniture. From my experience, what is considered unfurnished in Malaysia is, quite literally, an empty unit…with wires still hanging off the ceiling and walls.

I thought it would also be interesting to see how the layouts are distributed in all of these listings.

#Create table for residency and layout columns

y = data.frame(table(final_fil[,c("residency", "layout")]))

y = y[y[,"Freq"] != 0,]

names(y) = c("residency", "layout", "Posts")

rownames(y) = NULL

#Plot

ggplot(y, aes(x=residency, y=Posts, fill=layout)) +

geom_bar(stat="identity") + coord_flip() +

scale_fill_brewer(palette="Dark2") +

xlab("Residency") + ggtitle("Number of posts per residency, with layouts") +

theme(plot.title=element_text(size=16, face = "bold", color = "Black")) +

theme(axis.text.y=element_text(face = "bold", color = "black"),

axis.text.x=element_text(face = "bold", color = "black"))

And that gives us…

I understand that it would’ve been better if the bars were in descending order, but I just couldn’t figure out how to do that. The usual reordering inside the “aes” did not work and I’m still trying to understand why. So for now, I figured ggplot2’s usual alphabetical order will suffice.
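One likely reason, offered here as a guess rather than a confirmed diagnosis: `reorder()` sorts the levels by the mean of the second argument by default. The table `y` has several rows per residency (one per layout), while the length of a stacked bar is the sum of those rows, so ordering by the mean can give a different order from the one the bars suggest. Passing `FUN = sum` orders by the totals instead. The toy counts below are made up to show the difference.

```r
# Made-up counts: one row per residency/layout pair, as in the table y above
toy <- data.frame(
  residency = factor(c("A", "A", "A", "B")),
  layout = c("1-Bedroom", "2-Bedroom", "3-Bedroom", "3-Bedroom"),
  Posts = c(4, 4, 4, 10)
)

# Default: levels ordered by the MEAN of Posts within each residency
by_mean <- levels(reorder(toy$residency, toy$Posts))

# Ordered by the SUM of Posts, which is what a stacked bar's length shows
by_sum <- levels(reorder(toy$residency, toy$Posts, FUN = sum))
```

Here residency A has the larger total (12 against 10) but the smaller mean (4 against 10), so the two orderings disagree. In the plot itself, the equivalent change would be `aes(x = reorder(residency, Posts, FUN = sum), y = Posts, fill = layout)`.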

As you can clearly see, a big portion of all posts are categorized under 3-bedrooms. The most notable of which is the one for Vista Kiara which, if you recall, had the highest number of rental listings in our sample.

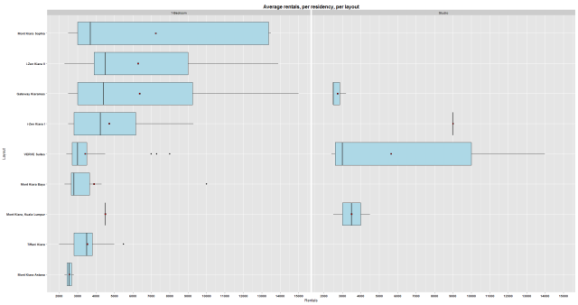

And this finally brings us to the numbers that matter: the rental rates. All numbers here are rentals per month. The following code looks at the rental for each layout type in Mont Kiara. After inspecting the dataframes, it looks like there are plenty of outliers in each category, so I think a boxplot would be best. Plus, it will be great to see how much it would cost, on average, to rent a unit of a particular layout in Mont Kiara.

#y-axis breaks at every RM 1000, from 1000 up to 30000

Breaks = seq(1000, 30000, by = 1000)

ggplot(final_fil, aes(x=layout, y=rental)) + geom_boxplot() +

stat_summary(fun = "mean", geom="point", shape = 22, size = 3, fill = "red") +

xlab("Layout") + ggtitle("Distribution of rentals per layout") + ylab("Rentals") +

theme(plot.title=element_text(size=16, face = "bold", color = "Black")) +

theme(axis.text.y=element_text(face = "bold", color = "black"),

axis.text.x=element_text(face = "bold", color = "black")) +

scale_y_continuous(breaks=Breaks)

There seem to be a whole bunch of outliers for the 1-bedroom category, which explains why the mean (the red dot) is not so close to the median (the horizontal line in the box). The median would indicate that the typical rental for a 1-bedroom is somewhere around RM 3500. The Studio category seems to back that up, since many people consider those two groups one and the same.
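To see why a handful of outliers separates the red dot from the median line, here is a tiny sketch with made-up rentals, none of which come from the scraped data. One extreme listing is enough to drag the mean well above the median.

```r
# Made-up monthly rentals for one layout, with a single extreme listing
rentals <- c(3000, 3200, 3500, 3800, 4000, 20000)

# The mean is pulled upwards by the 20000 listing...
mean_rental <- mean(rentals)

# ...while the median stays near the bulk of the listings
median_rental <- median(rentals)
```

This is why the median is the safer number to read off the boxplot when a category has many outliers.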

It’s also worth noting that there doesn’t seem to be much difference between the 1-bedroom and the 2-bedroom, while the 8-bedroom category seems to come from a single data point. I’m not exactly certain what the difference is between a 4-bedroom and a 4.1-bedroom, but if the means are to be used, it’ll cost you about another RM 1000 in rent. The 3-bedroom, the category that seems to be the most readily available as we’ve seen in the previous charts, goes for somewhere between 4500 and 5000 ringgit. I would think that’s still pretty steep, even if most of the listings are fully furnished.

I was hoping to end this post with a chart that shows the average rental for each residency, and for each layout; but I’m worried that it would end up as a lot of “visual noise”. Besides, I still need to figure out how to arrange the bars using ggplot2 in circumstances where I’m using 3 variables, of which 2 are categorical. The best that I could come up with is using the facet_grid() function in ggplot2. And so here they are, two at a time:

I haven’t plotted the charts for 4.1 bedrooms and 6+ bedrooms because they seem to be coming from very few observations, and so there really was no need to plot them.
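The plotting code for the faceted charts isn't shown in the post, so the following is only a guess at how such a chart could be put together with `facet_grid()`. The data frame is made up in the same shape as `Mkiara_data`, and the residency names and averages are illustrative only.

```r
library(ggplot2)

# Made-up averages in the same shape as Mkiara_data (values are illustrative)
toy_avg <- data.frame(
  residency = c("A Kiara", "B Kiara", "A Kiara", "B Kiara"),
  layout = c("2-Bedroom", "2-Bedroom", "3-Bedroom", "3-Bedroom"),
  Average_Rental = c(4000, 3500, 6000, 4500)
)

# One panel per layout, with the residencies along the flipped axis
p <- ggplot(toy_avg, aes(x = residency, y = Average_Rental)) +
  geom_bar(stat = "identity") +
  coord_flip() +
  facet_grid(. ~ layout) +
  xlab("Residency") + ylab("Average rental")
```

Printing `p` draws the chart. Filtering the data to two layouts at a time before plotting would give panels in pairs, as described above.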

As always, below is all the data I worked with.

Propwall_link, Propwall_link_details_edited, Propwall_averages.

Tagged: data, programming, r, rstats, web scraping

