Using Microsoft’s Emotion API to Settle an Old Argument

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

So Tuesday happened. I have a lot to say about our President-elect, and you’ll probably see a couple posts about him in the next few weeks. But that’s for later. Today, I’m writing about a more pressing issue, one that has been subject to debate for years without resolution, and I plan to settle this debate once and for all.

Do I look angry in this picture?

Here’s the backstory: in my first semester of college in 2010, I took a drawing class. We were required to draw a self-portrait at the beginning of the class, then at the end, after having been trained. I had taken many drawing courses prior to the class and thought myself quite skilled, and I remember when I drew my first self-portrait thinking “How can I do better than this?” But a semester later, I drew this.

I remember after drawing this picture thinking “That’s how.”

Everyone who saw the picture was impressed, from my parents to my art professor (who begged me not to stop drawing; sadly, I have not drawn like this since that class, though I hope someday to recover those skills and draw again). I hung the picture on my bedroom door, and one morning my little sister’s dog Fancy woke me up barking frantically at it; apparently it was realistic enough to frighten her.

Yet while the picture was impressive, there was a common reaction to it: I look angry. Everyone would ask me, “Why do you look so angry in this picture?” It even reached a point where my grandpa, when he saw it, pointed to it and said, “This is not you. This is not the person who you are.” (My professor, when I mentioned this to him, said what I managed to do was become so focused I captured that focus and intensity in the drawing.)

I can assure you that I was not angry while drawing this picture. Just consider some of the selfies I took that night:

No, people, I was not angry, and I don’t have anger issues. (Well, I do have a deep anger at the universe for all the slights it has dealt me and all the injustices in the world, but that’s a separate issue.)

Months ago, I saw this tutorial on using Microsoft’s Emotion API to detect emotions in photos with R. I thought the idea would be fun, and today I decided to see what the API would say about my drawing.

Here’s the result (using a lot of the code from the aforementioned tutorial; note that you will have to create an account and get a key to use the API):

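```r
library("httr")      # HTTP requests: POST(), content()
library("XML")       # loaded in the original tutorial; not used below
library("stringr")   # loaded in the original tutorial; not used below
library("ggplot2")   # plotting
library("reshape2")  # melt()

# Your Emotion API subscription key. Reading it from an environment variable
# is an assumption; EMOTION_API_KEY is a hypothetical name, use your own setup.
emotionKEY <- Sys.getenv("EMOTION_API_KEY")

# Define image source
img.url <- 'https://ntguardian.files.wordpress.com/2016/11/selfportrait.jpg'

# Define Microsoft API URL to request data (the 2016 Project Oxford endpoint)
URL.emoface <- 'https://api.projectoxford.ai/emotion/v1.0/recognize'

# Request body: the API fetches the image from this URL
mybody <- list(url = img.url)

# Request data from Microsoft; the outer parentheses print the response
(faceEMO <- POST(
  url = URL.emoface,
  content_type('application/json'),
  add_headers(.headers = c('Ocp-Apim-Subscription-Key' = emotionKEY)),
  body = mybody,
  encode = 'json'
))
```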

```
## Response [https://api.projectoxford.ai/emotion/v1.0/recognize]
## Date: 2016-11-13 22:41
## Status: 200
## Content-Type: application/json; charset=utf-8
## Size: 264 B
```

```r
# Parse the JSON response; the first element describes the first (here, the only) detected face
(SelfPortrait <- httr::content(faceEMO)[[1]])
```

```
## $faceRectangle
## $faceRectangle$height
## [1] 530
##
## $faceRectangle$left
## [1] 584
##
## $faceRectangle$top
## [1] 549
##
## $faceRectangle$width
## [1] 530
##
##
## $scores
## $scores$anger
## [1] 0.004670194
##
## $scores$contempt
## [1] 8.175469e-05
##
## $scores$disgust
## [1] 5.616587e-05
##
## $scores$fear
## [1] 0.0008795662
##
## $scores$happiness
## [1] 3.147202e-05
##
## $scores$neutral
## [1] 0.9871294
##
## $scores$sadness
## [1] 0.003053426
##
## $scores$surprise
## [1] 0.00409801
```

```r
# Reshape the scores into a long data frame: one row per emotion
o <- melt(as.data.frame(SelfPortrait$scores))
```

```
## Using as id variables
```

```r
names(o) <- c("Emotion", "Level")
o
```

```
##     Emotion        Level
## 1     anger 4.670194e-03
## 2  contempt 8.175469e-05
## 3   disgust 5.616587e-05
## 4      fear 8.795662e-04
## 5 happiness 3.147202e-05
## 6   neutral 9.871294e-01
## 7   sadness 3.053426e-03
## 8  surprise 4.098010e-03
```

```r
# Make plot: one bar per emotion score
ggplot(data = o, aes(x = Emotion, y = Level, fill = Emotion)) +
  geom_bar(stat = "identity") +
  scale_fill_brewer(palette = "Set3") +
  ggtitle("Detected Emotions in Self Portrait") +
  theme_bw()
```

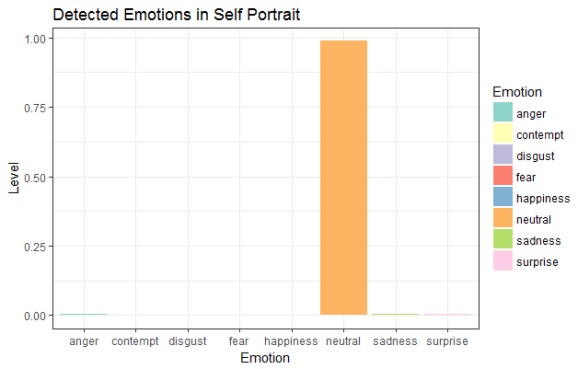

The API successfully detected the region of the picture where my face is located, and I’m confident it provides a reasonable analysis of the emotions seen. Microsoft detects essentially one emotion in the drawing: neutral, with a score of about 0.987, while anger scores below 0.005. Lots and lots of neutral. I will also admit that there is an intensity in my expression that the API probably does not capture or quantify, and that intensity, coupled with the sheer “neutrality” of my expression, is perhaps what people perceive as anger.
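To make those two observations concrete, here is a small follow-up sketch that works only on the values printed in the response above, so it makes no new API call. It assumes, as the field names suggest, that `left` and `top` give the top-left corner of the face rectangle in pixels; the names `face`, `scores`, and `face_bounds` are illustrative, not part of the API.

```r
# Values copied from the API response printed above (no new API call is made)
face <- list(top = 549, left = 584, width = 530, height = 530)
scores <- c(anger = 0.004670194, contempt = 8.175469e-05,
            disgust = 5.616587e-05, fear = 0.0008795662,
            happiness = 3.147202e-05, neutral = 0.9871294,
            sadness = 0.003053426, surprise = 0.00409801)

# Pixel bounds of the detected face, assuming left/top is the top-left corner
face_bounds <- c(x_min = face$left, y_min = face$top,
                 x_max = face$left + face$width,
                 y_max = face$top + face$height)
print(face_bounds)

# Emotion with the largest score
dominant <- names(which.max(scores))
cat("Dominant emotion:", dominant, "\n")

# The eight scores add up to (almost exactly) 1, so they read like shares
cat("Sum of scores:", sum(scores), "\n")

# How many times larger the neutral score is than the anger score
cat("neutral / anger:", round(scores[["neutral"]] / scores[["anger"]], 1), "\n")
```

By this measure, the neutral score is more than 200 times the anger score.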

Will that end the discussion? Probably not. Computers see what they see, but in the end what matters is what people perceive; emotions matter to people, not to computers. Nevertheless, it’s nice to get some vindication from Microsoft: I was not angry!
