The Golden Section Search Method: Modifying the Bisection Method with the Golden Ratio for Numerical Optimization

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Introduction

The first algorithm that I learned for root-finding in my undergraduate numerical analysis class (MACM 316 at Simon Fraser University) was the bisection method. It’s very intuitive and easy to implement in any programming language (I was using MATLAB at the time). The bisection method can be easily adapted for optimizing 1-dimensional functions with a slight but intuitive modification. As there are numerous books and web sites on the bisection method, I will not dwell on it in my blog post.

Instead, I will explain a clever and elegant way to modify the bisection method with the golden ratio that results in faster computation; I learned this method while reading “A First Course in Statistical Programming with R” by John Braun and Duncan Murdoch. Using a script in R to implement this special algorithm, I will illustrate how to minimize a non-differentiable function with the golden section search method. In a later post (for the sake of brevity), I will use the same method to show that the minimizer of the sum of the absolute deviations from a univariate data set is the median. The R functions and script for doing everything are in another post.

The Fibonacci spiral approximates the golden spiral, a logarithmic spiral whose growth factor is the golden ratio.

Source: Dicklyon via Wikimedia

Minimization with the Bisection Method

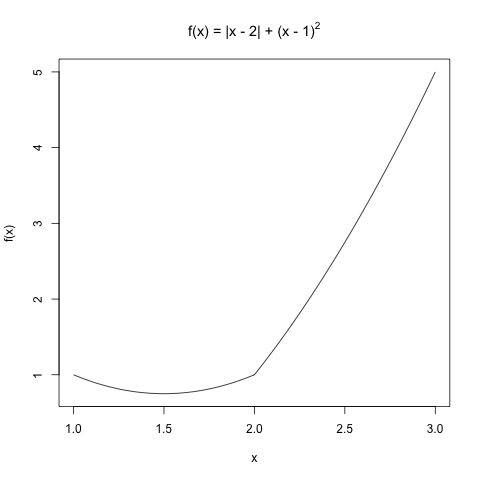

Assume that a single-variable continuous function as a unique minimum (and, thus, a unique minimizer) in a closed interval [a, b]. If this function is differentiable in [a, b], then calculus can be used easily to find the minimizer. However, if the function has a cusp or a kink, then it’s not differentiable at that point, and numerical methods are needed instead. For example, consider the following function.

Within the interval [1, 3], this function has a unique minimizer near x = 1.5, but it also has a cusp at x = 2. Thus, it is not differentiable at x = 2. A simple, stable, but slow numerical method to find the minimizer is the bisection method.

To use the bisection method to find the minimizer, we can

1) Pick 2 points, and

, with

and both points in [a, b].

2) Find and

.

3)

a) If , because this unique minimizer must be less than

, and the function is decreasing from

to

.

b) Otherwise (i.e. ), the minimizer must be to the left of

with logically analogous reasoning.

4)

i) If 3a is true, then the minimizer must be in the interval ; repeat the algorithm from Step 1 until the interval length is smaller than some pre-set tolerance.

ii) If 3b is true, then the minimizer must be in the interval ; repeat the algorithm from Step 1 until the interval length is smaller than some pre-set tolerance.

To summarize this algorithm, every iteration beings with

– the boundaries of the interval ,

– 2 test points for the argument, and

.

The function is evaluated at and

, and, based on whether

, a new set of boundaries and test points are set for the next iteration.

Using the Golden Ratio to Set the Test Points

Since the test points and

are arbitrarily set, it comes as no surprise that the choice of the test points affects the speed of the computation. A clever way to save computation time is to set the test points to take advantage of some special properties of the golden ratio, which Braun and Murdoch denote with

and has the value

This number has many special properties that you can easily find on the web. The one that will save computation time is

Let the lower and upper bounds of the interval of interest be and

, respectively. Now, let’s set the test points as

The advantage of setting the test points as these above values comes when one of the new test points is updated. Suppose that

. Thus,

becomes the new lower bound, which I will denote as

. The beauty of the golden ratio comes in calculating the new lower test point, which I will denote as

.

Since becomes the new lower bound.

Recall that

Substituting the right-hand side of this above equation for in the calculation of

, some very simple algebraic manipulation yields

Now, taking advantage of that special property of the golden ratio, , some more simple algebraic manipulation yields

Thus, the new lower test point is just the old upper test point. This saves computation time, because we don’t need to compute a new lower test point; we can just recycle the value that we knew from the old upper test point!

Similar logic for the case will show that the new upper test point is simply the old lower test point. Notationally, if

,

then

.

Using the Script to Numerically Minimize a Function

In another post, you can find the function for implementing this special golden search method and the script for minimizing the above function with the cusp. This script is basically the same as the one written by Braun and Murdoch on Pages 134-135 in their book. My script used slightly more self-evident variable names and included debugging statements to help me to identify why my function was not working properly when I first wrote it. Debugging statements are statements that show the values of key variables being computed in the flow of a script (and often within loops or branches) to identify the point in the flow at which the script stopped working properly. My mathematical and statistical programming skills became much better when I began using debugging statements. They are good for debugging, but should generally be commented out when the script is corrected and working properly. Since the problems that I am solving are quite simple, I kept them in the script and will show part of the output to illustrate why they are useful.

Recall the function with a cusp that I plotted above.

Using my function, the initial boundaries 1 and 3, and an absolute tolerance of , here is what my function, golden.section.search(), returned in the first iteration.

> golden.section.search(f, 1, 3, 1e-5) Iteration # 1 f1 = 0.8196601 f2 = 1.763932 f2 > f1 New Upper Bound = 2.236068 New Lower Bound = 1 New Upper Test Point = 1.763932 New Lower Test Point = 1.472136

This function used 26 iterations to get the final answer.

Iteration # 26 f1 = 0.75 f2 = 0.75 f2 > f1 New Upper Bound = 1.500003 New Lower Bound = 1.499995 New Upper Test Point = 1.5 New Lower Test Point = 1.499998 Final Lower Bound = 1.499995 Final Upper Bound = 1.500003 Estimated Minimizer = 1.499999

This is consistent with the “eye-balled” answer of from the plot.

Filed under: Applied Mathematics, Numerical Analysis, Plots, R programming, Statistical Computing Tagged: applied mathematics, bisection, bisection method, debugging, debugging statements, golden ratio, numerical analysis, numerical method, numerical methods, numerical optimization, optimization, R, R function, R programming, statistical computing

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.