GSoC 2017: Integrating biodiversity data curation functionality


URL of project idea page: https://github.com/rstats-gsoc/gsoc2017/wiki/Integrating-biodiversity-data-curation-functionality

Introduction

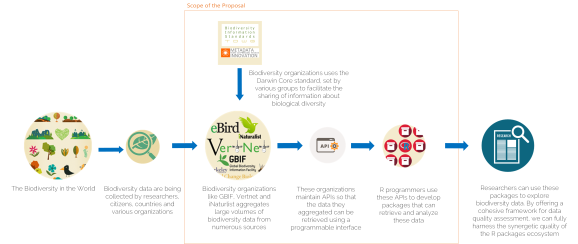

The importance of data in biodiversity research has been stressed repeatedly in recent years, and many organizations have come together to provide data for advancing that research. That, however, is exactly where the main difficulty lies: because so many organizations aggregate data, the data vary in precision and quality. Further, although more researchers have started to use R for their analyses, they need to retrieve, manage and assess high-volume data with a complex Darwin Core (DwC) structure, so only researchers with a very strong R programming background have been able to attempt this.

Various R packages created so far have each focused on individual elements of the process. For example:

- finch, rgbif and spocc for biodiversity data retrieval

- taxize, traits and rredlist for taxonomic enrichment and cleaning

- biogeo, rgeospatialquality, scrubr and assertr for data cleaning

Thus, a researcher who decides to use these tools needs to:

- Know that these packages exist

- Understand what each package does

- Compare packages offering the same functionality and decide which best suits their needs

- Maintain compatibility between the packages and the datasets passed between them

What we propose:

We propose to create an R package that will serve as the main data retrieval, cleaning, management and backup tool for researchers. Rather than being built from scratch, its functionality will combine and enhance functions from various existing packages. This way we can build on existing resources, collaborative knowledge and skills while efficiently addressing the problem we identified. The package will also address the issue of researchers who lack strong R programming skills.

Before we analyze solutions, it’s important to understand the stakeholders and scope of the project.

Proposed Package:

The package will cover the major processes in the research pipeline.

- Getting biodiversity data into the workspace

Biodiversity data can be read from existing DwC archive files in various formats (DwCA, XML, CSV) or downloaded from online sources (GBIF, VertNet). The package will therefore include functions to read local files in XML, DwCA and CSV formats, to download data directly from GBIF and to retrieve data from the respective APIs. For users who do not know exactly what data to retrieve, name suggestion functions will be included. Converting common names to scientific names and vice versa, and mapping taxon keys to names, will also be covered. Finally, functions to convert results to simple data frames and to retrieve media associated with occurrences will be included.

- Flagging the data

Biodiversity data is aggregated by many different organizations, so it varies in precision and quality. Before using the data, it is necessary to check its quality and strip the records that fall short of the expected quality. Several existing packages, such as scrubr and rgeospatialquality, check data for various discrepancies. Integrating the functionality of such packages to provide better quality control will benefit the community greatly. The data will be checked for the following discrepancies:

- Spatial: incorrect, impossible, incomplete and unlikely coordinates, and invalid countries and country codes

- Temporal: missing or incorrect dates in all time fields

- Taxonomic: epithet, scientific name and common name discrepancies, along with fixing scientific names

- Duplicates: duplicate records will be flagged
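To make the kinds of checks listed above concrete, here is a minimal base R sketch of a few of them. The function names, the toy data and the exact rules (for example, treating any date that does not parse as `YYYY-MM-DD` as incorrect) are assumptions for illustration only, not the API of the proposed package or of scrubr or rgeospatialquality.

```r
# Hypothetical toy occurrence records using Darwin Core style column names.
occ <- data.frame(
  scientificName   = c("Puma concolor", "Puma concolor", "Panthera leo", "Panthera leo"),
  decimalLatitude  = c(45.1, 45.1, NA, 95.0),
  decimalLongitude = c(-110.5, -110.5, 30.2, 20.0),
  eventDate        = c("2015-06-01", "2015-06-01", "2016-13-40", NA),
  stringsAsFactors = FALSE
)

# Spatial: a coordinate pair is incomplete when either value is missing.
flag_incomplete_coords <- function(df) {
  is.na(df$decimalLatitude) | is.na(df$decimalLongitude)
}

# Spatial: a coordinate is impossible when it falls outside the valid range.
flag_impossible_coords <- function(df) {
  lat <- df$decimalLatitude
  lon <- df$decimalLongitude
  (!is.na(lat) & abs(lat) > 90) | (!is.na(lon) & abs(lon) > 180)
}

# Temporal: flag dates that are missing or do not parse as YYYY-MM-DD (assumed rule).
flag_bad_date <- function(df) {
  is.na(as.Date(df$eventDate, format = "%Y-%m-%d"))
}

# Duplicates: flag every record that exactly repeats an earlier one.
flag_duplicate <- function(df) {
  duplicated(df)
}

# Collect the flags side by side, one logical column per check.
flags <- data.frame(
  incompleteCoords = flag_incomplete_coords(occ),
  impossibleCoords = flag_impossible_coords(occ),
  badDate          = flag_bad_date(occ),
  duplicate        = flag_duplicate(occ)
)
print(flags)
```

Keeping each check as a separate function that returns one logical value per record makes it easy to combine any subset of flags later.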

- Cleaning data

The process is done step by step to help the user configure and control the cleaning.

The data is first flagged for discrepancies, using any combination of spatial, temporal, taxonomic and duplicate flags that the user specifies. The user can then view the records that would be lost and decide whether to tweak the flags. When the data is high in volume, this stepwise cleaning helps the user for a number of reasons:

- A user who wants multiple flags can apply each quality check one at a time and decide, after each check, whether to remove the flagged records. If all flags were applied at once, the number of records marked for removal would be high, and going through all of them to decide whether each quality check is wanted would take much effort.

- When the user views the flagged data, not all fields are shown. Only the fields the quality check was run on and the resulting flag are shown. This saves the user from dealing with all the complex fields in the original data and from going back and forth through the data to check the flags.

Once the user is satisfied with the records that will be lost, the data can be cleaned.
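The flag, view, clean cycle described above could look roughly like the following base R sketch. All names here (`flag_incomplete_coords`, `view_flagged`, `clean_flagged`, the `checkedFields` attribute) are hypothetical and chosen only to illustrate the idea of showing just the checked fields plus the flag. The package's own intended interface is shown in the author's example that follows.

```r
# Hypothetical toy data; recordedBy stands in for the many other DwC fields.
occ <- data.frame(
  occurrenceID     = c("a1", "a2", "a3"),
  scientificName   = c("Puma concolor", "Panthera leo", "Panthera leo"),
  decimalLatitude  = c(45.1, NA, -2.3),
  decimalLongitude = c(-110.5, 30.2, 34.8),
  recordedBy       = c("A", "B", "C"),
  stringsAsFactors = FALSE
)

# Step 1: apply one check and remember which fields it inspected.
flag_incomplete_coords <- function(df) {
  df$flagIncompleteCoords <- is.na(df$decimalLatitude) | is.na(df$decimalLongitude)
  attr(df, "checkedFields") <- c("decimalLatitude", "decimalLongitude")
  df
}

# Step 2: show only the flagged rows, limited to the checked fields and the flag.
view_flagged <- function(df, flag) {
  df[df[[flag]], c(attr(df, "checkedFields"), flag), drop = FALSE]
}

# Step 3: drop the flagged rows and the helper flag column.
clean_flagged <- function(df, flag) {
  out <- df[!df[[flag]], setdiff(names(df), flag), drop = FALSE]
  attr(out, "checkedFields") <- NULL
  out
}

flagged <- flag_incomplete_coords(occ)
to_lose <- view_flagged(flagged, "flagIncompleteCoords")
print(to_lose)   # inspect what would be removed before committing

cleaned <- clean_flagged(flagged, "flagIncompleteCoords")
print(cleaned)
```

Because nothing is removed until the last step, the user can stop after viewing the flagged records and skip the check if too much data would be lost.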

Ex:

    # Step 01
    biodiversityData %>%
      coordinateIncompleteFlag() %>%
      allFlags(taxonomic)

    # Step 02
    viewFlaggedData()

    # Step 03
    cleanAll()

- Maintaining backups of data

The original data the user retrieved and any subsequent results of the process can be backed up with versioning to maintain reproducibility. Functions for maintaining repositories, backing up to repositories, loading from repositories and archiving will be implemented.
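As a rough illustration of versioned backups, the sketch below stores each snapshot as a numbered `.rds` file in a directory and loads any version back. The helper names `backup_data` and `load_backup`, the file naming scheme and the use of a plain directory as the "repository" are all assumptions. The proposal does not specify how repositories will be implemented.

```r
# Hypothetical helper: write the data as the next numbered snapshot in the repo.
backup_data <- function(df, repo) {
  dir.create(repo, showWarnings = FALSE, recursive = TRUE)
  version <- length(list.files(repo, pattern = "^v[0-9]+\\.rds$")) + 1
  saveRDS(df, file.path(repo, sprintf("v%03d.rds", version)))
  version
}

# Hypothetical helper: load a given version, or the latest when none is given.
load_backup <- function(repo, version = NULL) {
  if (is.null(version)) {
    version <- length(list.files(repo, pattern = "^v[0-9]+\\.rds$"))
  }
  readRDS(file.path(repo, sprintf("v%03d.rds", version)))
}

repo <- file.path(tempdir(), "biodiversity-backups")
unlink(repo, recursive = TRUE)  # start from an empty repository for the demo

raw <- data.frame(scientificName = c("Puma concolor", NA), stringsAsFactors = FALSE)
cleaned <- raw[!is.na(raw$scientificName), , drop = FALSE]

v1 <- backup_data(raw, repo)      # snapshot of the data as retrieved
v2 <- backup_data(cleaned, repo)  # snapshot after cleaning

original <- load_backup(repo, 1)  # go back to the original at any time
latest   <- load_backup(repo)     # or continue from the most recent version
```

Keeping the untouched original as the first version means every later result can be traced back to, and reproduced from, the retrieved data.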

Under the hood, we plan to implement the following in the package:

- Standardization of data

The retrieved data will be standardized according to DwC. To maintain consistency and feasibility, I have decided to use the GBIF fields as the standard.

The reasons are:

- GBIF uses DwC as its standard, and the fields from the GBIF backbone comply with DwC terms.

- GBIF is a well-established organization, and using the fields from its backbone will help ensure consistency and acceptance by researchers.

- GBIF is a superset of the major biodiversity data sources available, so data gathered from GBIF can be expected to be found in other sources as well.

- Unique fields grouping system for DwC fields, based on recommended grouping (see this and this)
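The two internal pieces above, standardizing field names and grouping fields, might be sketched as follows in base R. The source column names in `field_map`, the helper names and the choice to group terms by their DwC class (Taxon, Location, Event) are assumptions for illustration. The actual grouping the package will follow is the one in the recommendations referenced above.

```r
# Hypothetical mapping from source-specific column names to DwC terms.
field_map <- c(
  species   = "scientificName",
  latitude  = "decimalLatitude",
  longitude = "decimalLongitude",
  date      = "eventDate"
)

# Rename known columns to their DwC term; leave unknown columns untouched.
standardize_fields <- function(df, map) {
  known <- names(df) %in% names(map)
  names(df)[known] <- unname(map[names(df)[known]])
  df
}

# Illustrative grouping of a few terms by the DwC class they belong to.
field_groups <- list(
  Taxon    = "scientificName",
  Location = c("decimalLatitude", "decimalLongitude"),
  Event    = "eventDate"
)

# Return only the columns of one group that are present in the data.
select_group <- function(df, group, groups = field_groups) {
  df[, intersect(groups[[group]], names(df)), drop = FALSE]
}

raw <- data.frame(
  species = "Puma concolor", latitude = 45.1, longitude = -110.5,
  date = "2015-06-01", note = "sample", stringsAsFactors = FALSE
)

std <- standardize_fields(raw, field_map)
location <- select_group(std, "Location")
print(names(std))
print(location)
```

With one agreed set of field names, every flagging and cleaning function can rely on the same columns regardless of where the data came from.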

Conclusion

We believe a centralized data retrieval, cleaning, management and backup tool will benefit the biodiversity research community and remove many shortcomings in the current research process. The package will be built over the course of the next few months, and any insights, guidance and contributions from the community will be greatly appreciated.

Feedback

Please share your feedback and suggestions via GitHub issues.
