Advent of 2020, Day 20 – Orchestrating multiple notebooks with Azure Databricks

Series of Azure Databricks posts:

- Dec 01: What is Azure Databricks

- Dec 02: How to get started with Azure Databricks

- Dec 03: Getting to know the workspace and Azure Databricks platform

- Dec 04: Creating your first Azure Databricks cluster

- Dec 05: Understanding Azure Databricks cluster architecture, workers, drivers and jobs

- Dec 06: Importing and storing data to Azure Databricks

- Dec 07: Starting with Databricks notebooks and loading data to DBFS

- Dec 08: Using Databricks CLI and DBFS CLI for file upload

- Dec 09: Connect to Azure Blob storage using Notebooks in Azure Databricks

- Dec 10: Using Azure Databricks Notebooks with SQL for Data engineering tasks

- Dec 11: Using Azure Databricks Notebooks with R Language for data analytics

- Dec 12: Using Azure Databricks Notebooks with Python Language for data analytics

- Dec 13: Using Python Databricks Koalas with Azure Databricks

- Dec 14: From configuration to execution of Databricks jobs

- Dec 15: Databricks Spark UI, Event Logs, Driver logs and Metrics

- Dec 16: Databricks experiments, models and MLFlow

- Dec 17: End-to-End Machine learning project in Azure Databricks

- Dec 18: Using Azure Data Factory with Azure Databricks

- Dec 19: Using Azure Data Factory with Azure Databricks for merging CSV files

Yesterday we looked into ADF and how to create pipelines and connect different Azure services.

Today, we will look into connecting multiple notebooks and creating an orchestration, or workflow, of several notebooks.

1.Creating a folder with multiple notebooks

In the Azure Databricks workspace, create a new folder called Day20. Inside the folder, let’s create a couple of notebooks:

- Day20_NB1

- Day20_NB2

- Day20_functions

- Day20_Main

- Day20_NB3_Widget

All of them use Python as their language.

2.Concept

The outline of the notebooks is the following:

The main notebook (Day20_Main) is the one from which the end user or a job runs all the commands.

The first step is to run notebook Day20_NB1. Until it finishes, the next command (or step) in the main notebook is not executed. The notebook is deliberately empty, mimicking a notebook that performs a task independent of any other steps or notebooks.

The second step is to load Python functions with notebook Day20_functions. This notebook is just a collection of user-defined functions. Once the notebook is executed with %run, all the functions are declared and available in the main notebook.

The third step is to try and run a couple of these Python functions in the main notebook (Day20_Main). Simple as they are, they serve the purpose and show how such functions can be used and run.

The fourth step is to create a persistent element for storing results from the notebooks. I created a table day20_NB_run that stores an ID, a comment and a timestamp. A row is inserted at the end of every non-main notebook, which serves multiple purposes: feedback information for the next step, triggering the next step, logging, etc. The table is created in database Day10 (created on Day10) and re-created (DROP and CREATE) every time the main notebook is executed.

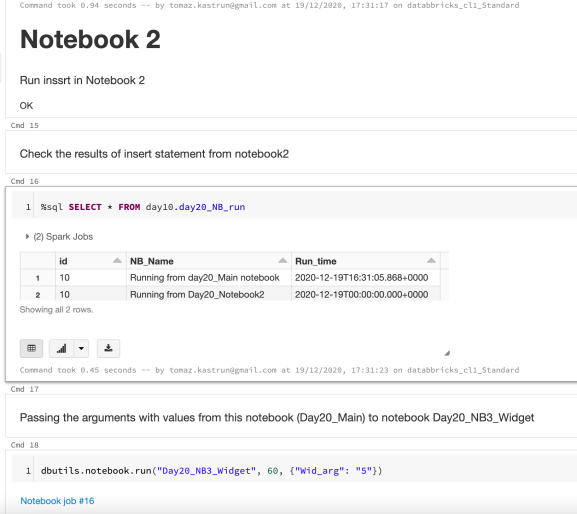

The fifth step is executing notebook Day20_NB2, which stores its end result in table day20_NB_run for logging or troubleshooting.

The sixth step is different, because we want back-and-forth communication between the main notebook (Day20_Main) and a “child” notebook (Day20_NB3_Widget). This can be done using Databricks widgets. The child notebook has a predefined set of possible values, and the main notebook passes one of them as a key-value argument. Once the code has run with the widget value, the result can be stored in the database (what I did in the demo) or returned to the main notebook in order to execute additional tasks based on the new values.
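The flow of steps one, four, five and six can be sketched as a plain-Python model. This is only an illustration under stated assumptions: the functions `nb1`, `nb2`, `nb3_widget`, `main` and `log_run`, and the in-memory list `run_log`, are hypothetical stand-ins for the notebooks and the day20_NB_run table. In Databricks each call would be a notebook run instead.

```python
from datetime import datetime

# In-memory stand-in for the day20_NB_run table (hypothetical, for illustration).
run_log = []

def log_run(run_id, nb_name):
    """Append one row (id, NB_Name, Run_time) to the stand-in log."""
    run_log.append((run_id, nb_name, datetime.now()))

def nb1():
    """Mimics Day20_NB1: an independent task that is deliberately empty."""
    return None

def nb2():
    """Mimics Day20_NB2: does its work, then logs the run."""
    log_run(10, "Running from Day20_Notebook2")

def nb3_widget(wid_arg="1"):
    """Mimics Day20_NB3_Widget: receives an argument, logs, returns a value."""
    log_run(10, "Running from day20_Widget notebook")
    return wid_arg

def main():
    """Mimics Day20_Main: each step starts only after the previous one ends."""
    nb1()
    log_run(10, "Running from day20_Main notebook")
    nb2()
    return nb3_widget("5")

result = main()
print(result, [row[1] for row in run_log])
```

The point of the sketch is the ordering: every step blocks until it finishes, and every notebook leaves a row behind that the next step can inspect.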

3.Notebooks

A closer look at each notebook.

Day20_NB1 – this one is a no-brainer; it is deliberately empty.

Day20_functions – the collection of Python functions, with the sample code:

import datetime

def add_numbers(x, y):
    # Return the sum of the two arguments.
    total = x + y
    return total

def current_time():
    # Print the current date and time.
    e = datetime.datetime.now()
    print("Current date and time = %s" % e)
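Calling these functions from the main notebook (step three of the concept) might look like the sketch below. The definitions are repeated only so the snippet is self-contained; in Day20_Main they would come from running Day20_functions. The argument values are arbitrary.

```python
import datetime

def add_numbers(x, y):
    """Return the sum of the two arguments."""
    return x + y

def current_time():
    """Print the current date and time."""
    now = datetime.datetime.now()
    print("Current date and time = %s" % now)

# Once the functions are loaded, they can be called like any local function.
result = add_numbers(2, 3)
print(result)   # 5
current_time()
```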

Day20_NB2 – the notebook that writes its result to the SQL table.

And the SQL code:

%sql INSERT INTO day10.day20_NB_run VALUES (10, "Running from Day20_Notebook2", CAST(current_timestamp() AS TIMESTAMP))

Day20_NB3_Widget – the notebook that receives arguments from a previous step (a notebook, a CRON job or a function), executes its steps based on this input and stores the results in the SQL table.

Here is the code from this notebook. The first step creates a widget on the notebook.

dbutils.widgets.dropdown("Wid_arg", "1", [str(x) for x in range(1, 10)])

Each time a number is selected, you can run the command to get the value out:

selected_value = dbutils.widgets.get("Wid_arg")

print("Returned value: ", selected_value)
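Widget values come back as strings, so a numeric choice usually needs validating and converting before it is used. A minimal sketch, assuming a hypothetical helper `parse_widget_value` (not part of the original notebook) and a hard-coded value in place of the `dbutils.widgets.get` call so it runs outside Databricks:

```python
def parse_widget_value(raw, choices):
    """Validate a widget string against its allowed choices and return an int."""
    if raw not in choices:
        raise ValueError(f"Unexpected widget value: {raw!r}")
    return int(raw)

# Same choices as the dropdown above: "1" through "9".
choices = [str(x) for x in range(1, 10)]

# In the notebook, raw would be dbutils.widgets.get("Wid_arg").
raw = "5"
selected_number = parse_widget_value(raw, choices)
print(selected_number + 1)   # 6
```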

The last step is to insert the values (and also the result of the widget) into the SQL table.

%sql INSERT INTO day10.day20_NB_run VALUES (10, "Running from day20_Widget notebook", CAST(current_timestamp() AS TIMESTAMP))

Day20_Main – the umbrella or main notebook, where all the orchestration is carried out. This notebook also holds the logic behind the steps and their communication.

In Day20_Main, another notebook is executed with the %run command followed by the path to the notebook.

%run /Users/[email protected]/Day20/Day20_functions

The SQL table is the easier part: define the table and insert the first row.

%sql DROP TABLE IF EXISTS day10.day20_NB_run;
CREATE TABLE day10.day20_NB_run (id INT, NB_Name STRING, Run_time TIMESTAMP)

%sql INSERT INTO day10.day20_NB_run VALUES (10, "Running from day20_Main notebook", CAST(current_timestamp() AS TIMESTAMP))

Between steps, I check the values in the SQL table, which gives the current status and also logs all the steps in the main notebook.
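The re-create, insert and check cycle can be tried locally with the sketch below. Note the swap: it uses Python's built-in sqlite3 module as a stand-in, not Spark SQL, so the table has no day10 database prefix and the column types are SQLite's. The helper `log_run` is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Re-create the log table on every run, as Day20_Main does.
conn.execute("DROP TABLE IF EXISTS day20_NB_run")
conn.execute(
    "CREATE TABLE day20_NB_run (id INTEGER, NB_Name TEXT, Run_time TIMESTAMP)"
)

def log_run(run_id, nb_name):
    """Insert one log row with the current timestamp."""
    conn.execute(
        "INSERT INTO day20_NB_run VALUES (?, ?, CURRENT_TIMESTAMP)",
        (run_id, nb_name),
    )

log_run(10, "Running from day20_Main notebook")
log_run(10, "Running from Day20_Notebook2")

# Status check between steps: which notebooks have reported in so far?
rows = conn.execute(
    "SELECT id, NB_Name, Run_time FROM day20_NB_run ORDER BY rowid"
).fetchall()
for row in rows:
    print(row)
```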

The following command executes a notebook with input parameters. The first argument is the notebook to run, Day20_NB3_Widget. The second is the timeout: the run is given at most 60 seconds to complete. The last argument is the collection of parameters passed to the notebook.

dbutils.notebook.run("Day20_NB3_Widget", 60, {"Wid_arg": "5"})
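Because the call returns whatever string the child notebook exits with, the main notebook can wrap it in a retry and parse the result. A sketch under assumptions: `run_with_retry` and `fake_notebook_run` are hypothetical names, the fake function replaces `dbutils.notebook.run` so the code runs outside Databricks, and it assumes the child ends with `dbutils.notebook.exit(...)` on a JSON string, which the demo notebook above does not show.

```python
import json

def run_with_retry(run_fn, path, timeout_seconds, arguments, max_retries=2):
    """Call run_fn(path, timeout_seconds, arguments), retrying on failure."""
    attempt = 0
    while True:
        try:
            return run_fn(path, timeout_seconds, arguments)
        except Exception:
            attempt += 1
            if attempt > max_retries:
                raise

def fake_notebook_run(path, timeout_seconds, arguments):
    """Local stand-in: returns the string a child notebook might exit with."""
    return json.dumps({"notebook": path, "Wid_arg": arguments["Wid_arg"]})

# In Databricks, pass dbutils.notebook.run instead of fake_notebook_run.
raw_result = run_with_retry(
    fake_notebook_run, "Day20_NB3_Widget", 60, {"Wid_arg": "5"}
)
result = json.loads(raw_result)
print(result["Wid_arg"])   # 5
```

Passing the run function in as an argument keeps the retry logic testable without a cluster.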

We have seen that orchestration can run notebooks in multiple languages, use input or output parameters and be part of a larger ETL or ELT process.

Tomorrow we will explore Scala, since we haven’t yet discussed it, although we have mentioned it on multiple occasions.

The complete set of code and notebooks will be available at the GitHub repository.

Happy Coding and Stay Healthy!
