Advent of 2020, Day 18 – Using Azure Data Factory with Azure Databricks

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Series of Azure Databricks posts:

- Dec 01: What is Azure Databricks

- Dec 02: How to get started with Azure Databricks

- Dec 03: Getting to know the workspace and Azure Databricks platform

- Dec 04: Creating your first Azure Databricks cluster

- Dec 05: Understanding Azure Databricks cluster architecture, workers, drivers and jobs

- Dec 06: Importing and storing data to Azure Databricks

- Dec 07: Starting with Databricks notebooks and loading data to DBFS

- Dec 08: Using Databricks CLI and DBFS CLI for file upload

- Dec 09: Connect to Azure Blob storage using Notebooks in Azure Databricks

- Dec 10: Using Azure Databricks Notebooks with SQL for Data engineering tasks

- Dec 11: Using Azure Databricks Notebooks with R Language for data analytics

- Dec 12: Using Azure Databricks Notebooks with Python Language for data analytics

- Dec 13: Using Python Databricks Koalas with Azure Databricks

- Dec 14: From configuration to execution of Databricks jobs

- Dec 15: Databricks Spark UI, Event Logs, Driver logs and Metrics

- Dec 16: Databricks experiments, models and MLFlow

- Dec 17: End-to-End Machine learning project in Azure Databricks

Yesterday we built an end-to-end machine learning project. Almost, one could argue. What if we want to incorporate this notebook into a larger data flow in Azure? In that case we need Azure Data Factory (ADF).

Azure Data Factory is Azure's service for ETL operations. It is a serverless service for data transformation, data integration and orchestration across many different Azure services. It also bears some resemblance to SSIS (SQL Server Integration Services).

In the Azure portal (not the Azure Databricks portal), search for “data factory” or “data factories”, and the search should suggest the matching services. Select “Data factories”.

Once on the Data factories page, select “+ Add” to create a new ADF and fill in the required information:

- Subscription

- Resource Group

- Region

- Name

- Version

I have selected the same resource group as the one used for Databricks and named the factory ADF-Databricks-day18.

Optionally, fill in the additional tabs: Git configuration (you can also do this later), Networking for special configurations, Advanced settings and tags. Then create the ADF. Once creation and deployment have completed, go to the resource and you should see the dashboard for this particular service.
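The portal form maps onto a single Azure Resource Manager (ARM) call. The sketch below shows it, assuming you already have an Azure AD bearer token for `https://management.azure.com` (obtaining one is not covered here). It builds a `PUT` request against the `Microsoft.DataFactory/factories` resource with the Data Factory V2 API version `2018-06-01`. The subscription ID, resource group, region and token are placeholders. The code only builds the request and does not send it.

```python
import json
import urllib.request

ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2018-06-01"  # Data Factory V2 REST API version


def build_create_factory_request(subscription_id: str, resource_group: str,
                                 factory_name: str, region: str,
                                 bearer_token: str) -> urllib.request.Request:
    """Build, but do not send, the ARM PUT request that creates a data factory."""
    url = (f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
           f"/resourceGroups/{resource_group}"
           f"/providers/Microsoft.DataFactory/factories/{factory_name}"
           f"?api-version={API_VERSION}")
    # Minimal body with just the region; tags and Git settings can be added later.
    body = {"location": region}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={"Authorization": f"Bearer {bearer_token}",
                 "Content-Type": "application/json"},
    )


# Placeholder values: substitute your own subscription, resource group and region.
req = build_create_factory_request("<subscription-id>", "<resource-group>",
                                   "ADF-Databricks-day18", "westeurope", "<arm-token>")
print(req.get_method(), req.full_url)
# Actually sending it would be: urllib.request.urlopen(req)
```

Keeping the request construction separate from sending it makes the URL and body easy to inspect before anything touches your subscription.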

Select “Author & Monitor” to open Azure Data Factory. You will be redirected to a new site:

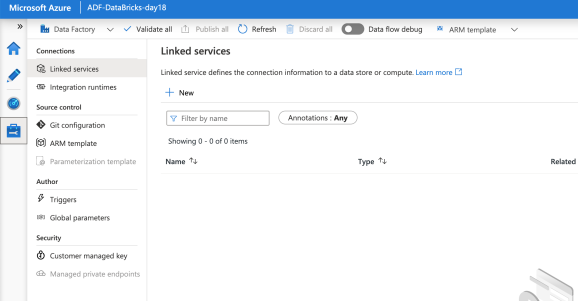

On the left-hand side you will find a vertical navigation bar. Look for the bottom icon, which looks like a toolbox and is used to manage the ADF.

This takes you to the settings page for this data factory.

Here we will create a new linked service, which enables communication between Azure Data Factory and Azure Databricks. Select “+ New” to add a new linked service.

A window will pop up on the right-hand side listing the services you can link to ADF. Either search for Azure Databricks or click “Compute” (not “Storage”), and you should see the Databricks logo immediately.

Click on Azure Databricks and then on “Continue”. You will get a form with the information you need to fill in.

Most of the required information is straightforward. What still needs sorting out is authentication, for which we will use an access token. On Day 9, when we used a Shared Access Signature (SAS), we also needed to create Azure Databricks tokens. Open a new window in Azure Databricks (but do not close the ADF settings where you are creating the new linked service) and go to the settings for this particular workspace.

Click on the account icon (mine shows “DB_py” and a glyph) and select “User Settings”.

As you can see, I already have a token ID and secret from Day 9 in the Databricks system. Let’s generate a new token by clicking “Generate New Token”.

Give the token a name (“adf-db”) and a lifetime period. Generate it and copy the token (!): once it is generated, you will never see it again. Of course, you can always generate a new one, but if you have multiple services bound to it, it is wise to store it somewhere secure.
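The UI is all you need here. If you prefer scripting, the Databricks Token API exposes the same operation as `POST /api/2.0/token/create`, taking a `comment` and an optional `lifetime_seconds`. The sketch below builds that request without sending it. Three assumptions apply:

- The workspace URL is a placeholder.
- The 90-day lifetime is only an example.
- Calling the endpoint itself requires an existing credential, assumed here to be another personal access token.

```python
import json
import urllib.request


def build_token_request(workspace_url: str, existing_token: str,
                        comment: str, lifetime_days: int) -> urllib.request.Request:
    """Build, but do not send, a Databricks Token API request for a new access token."""
    body = {
        "comment": comment,                                 # the token's name/description
        "lifetime_seconds": lifetime_days * 24 * 60 * 60,   # lifetime converted to seconds
    }
    return urllib.request.Request(
        workspace_url.rstrip("/") + "/api/2.0/token/create",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {existing_token}",
                 "Content-Type": "application/json"},
    )


# Placeholder workspace URL and token; "adf-db" matches the name used above.
req = build_token_request("https://<your-workspace>.azuredatabricks.net",
                          "<existing-token>", "adf-db", lifetime_days=90)
print(req.get_method(), req.full_url)
```

The response returns the secret in its `token_value` field, and as in the UI it is only returned once.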

Go back to Azure Data Factory and paste the token into the linked service settings. Select or fill in the remaining information.

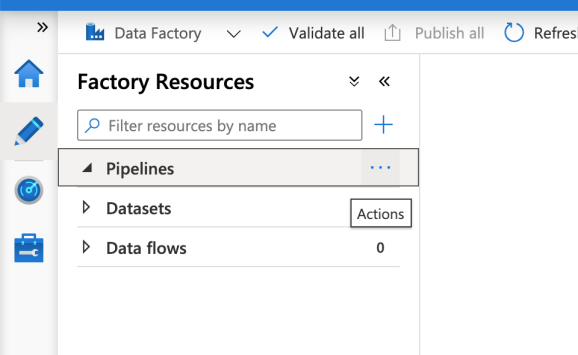
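Behind the form, ADF stores the linked service as a JSON definition. The sketch below builds a minimal version of it. It assumes the token generated above and an existing interactive cluster, whose ID is a placeholder. ADF can also spin up a new job cluster per run instead, which uses different properties not shown here. The workspace URL is also a placeholder.

```python
import json


def databricks_linked_service(name: str, workspace_url: str,
                              access_token: str, cluster_id: str) -> dict:
    """Return an ADF linked-service definition for an Azure Databricks workspace."""
    return {
        "name": name,
        "properties": {
            "type": "AzureDatabricks",
            "typeProperties": {
                "domain": workspace_url,          # the Databricks workspace URL
                "accessToken": {                  # the personal access token from Databricks
                    "type": "SecureString",
                    "value": access_token,
                },
                "existingClusterId": cluster_id,  # run activities on an existing cluster
            },
        },
    }


linked_service = databricks_linked_service(
    "AzureDatabricks_adf",
    "https://<your-workspace>.azuredatabricks.net",
    "<token>",
    "<cluster-id>",
)
print(json.dumps(linked_service, indent=2))
```

Pasting the raw token works for a demo. For anything shared, ADF can instead reference a secret stored in Azure Key Vault.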

Once the linked service is created, select “Author” in the left vertical menu of Azure Data Factory.

This brings up a menu where you can start putting together a pipeline. In addition, you can register datasets and data flows in ADF; this is especially useful for large-scale ETL or orchestration tasks.

Select Pipeline and choose “New pipeline”. You will be presented with a designer tool where you can assemble pipelines, flows and orchestrations.

Under the Databricks section of the Activities pane, you will find:

- Notebooks

- Jar

- Python

You can use Notebooks to build whole pipelines and to pass information between notebooks. You can also add an application (Jar) or a Python script to carry out a particular task.
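In the pipeline JSON, each of these three entries is a separate activity type. Each type has its own property naming what Databricks should run. The sketch below maps them and builds a minimal activity. The activity name and DBFS script path in the usage line are hypothetical. A real Jar activity also needs its JAR listed under `libraries`, which this sketch omits.

```python
# Activity type and the property naming what Databricks should run,
# for each Databricks entry in the ADF Activities pane.
DATABRICKS_ACTIVITIES = {
    "Notebook": ("DatabricksNotebook", "notebookPath"),
    "Jar": ("DatabricksSparkJar", "mainClassName"),
    "Python": ("DatabricksSparkPython", "pythonFile"),
}


def databricks_activity(kind: str, name: str, target: str,
                        linked_service: str) -> dict:
    """Build a minimal ADF activity definition for one of the three Databricks kinds."""
    activity_type, target_property = DATABRICKS_ACTIVITIES[kind]
    return {
        "name": name,
        "type": activity_type,
        # Points the activity at the Databricks linked service by name.
        "linkedServiceName": {"referenceName": linked_service,
                              "type": "LinkedServiceReference"},
        "typeProperties": {target_property: target},
    }


# Hypothetical example: a Python script stored on DBFS.
script_activity = databricks_activity("Python", "RunScript",
                                      "dbfs:/scripts/<your-script>.py",
                                      "AzureDatabricks_adf")
print(script_activity)
```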

Elements are added by dragging and dropping them onto the canvas. Select Notebook (Databricks notebook), drag the icon onto the canvas and drop it.

In the settings for this Notebook activity there is an “Azure Databricks” tab, where you select the linked service connection. Choose the one we created previously; mine is named “AzureDatabricks_adf”. Then, on the “Settings” tab, select the path to the notebook.
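The finished pipeline amounts to a JSON document containing one notebook activity wired to the linked service. The sketch below assembles an equivalent definition. The following values are placeholders:

- the pipeline and activity names
- the notebook path
- the `run_date` parameter

Values in `baseParameters` are passed to the notebook, where they can be read with `dbutils.widgets.get`.

```python
import json
from typing import Optional


def notebook_pipeline(pipeline_name: str, activity_name: str, notebook_path: str,
                      linked_service: str,
                      base_parameters: Optional[dict] = None) -> dict:
    """Return an ADF pipeline definition with a single Databricks Notebook activity."""
    notebook_activity = {
        "name": activity_name,
        "type": "DatabricksNotebook",
        "linkedServiceName": {"referenceName": linked_service,
                              "type": "LinkedServiceReference"},
        "typeProperties": {
            "notebookPath": notebook_path,
            # Passed to the notebook as widget values.
            "baseParameters": dict(base_parameters or {}),
        },
    }
    return {"name": pipeline_name,
            "properties": {"activities": [notebook_activity]}}


pipeline = notebook_pipeline("pipeline1", "Notebook1",
                             "/Users/<your-user>/<your-notebook>",
                             "AzureDatabricks_adf",
                             base_parameters={"run_date": "2020-12-18"})
print(json.dumps(pipeline, indent=2))
```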

Once you have entered all the needed information, remember to hit “Publish all”. If there are any conflicts in the code, you can fix them immediately.

You can now run the pipeline by selecting “Debug” or triggering it, schedule it, or connect it to another notebook.
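Both options also exist outside the designer. The sketch below builds a one-off run request with the `createRun` operation, whose body holds the pipeline parameters and whose response carries a `runId`. It also builds a daily `ScheduleTrigger` definition. The IDs, names, token and start time are placeholders. As before, nothing is sent.

```python
import json
import urllib.request

ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2018-06-01"


def factory_url(subscription_id: str, resource_group: str, factory_name: str) -> str:
    """ARM URL of a data factory resource."""
    return (f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
            f"/resourceGroups/{resource_group}"
            f"/providers/Microsoft.DataFactory/factories/{factory_name}")


def build_create_run_request(subscription_id: str, resource_group: str,
                             factory_name: str, pipeline_name: str,
                             bearer_token: str, parameters=None) -> urllib.request.Request:
    """Build, but do not send, a request that starts one pipeline run."""
    url = (f"{factory_url(subscription_id, resource_group, factory_name)}"
           f"/pipelines/{pipeline_name}/createRun?api-version={API_VERSION}")
    return urllib.request.Request(
        url,
        data=json.dumps(parameters or {}).encode("utf-8"),  # pipeline parameters
        method="POST",
        headers={"Authorization": f"Bearer {bearer_token}",
                 "Content-Type": "application/json"},
    )


def daily_schedule_trigger(trigger_name: str, pipeline_name: str,
                           start_time_utc: str) -> dict:
    """Return a ScheduleTrigger definition that runs the pipeline once a day."""
    return {
        "name": trigger_name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {"frequency": "Day", "interval": 1,
                               "startTime": start_time_utc, "timeZone": "UTC"},
            },
            "pipelines": [{
                "pipelineReference": {"referenceName": pipeline_name,
                                      "type": "PipelineReference"},
                "parameters": {},
            }],
        },
    }


run_req = build_create_run_request("<subscription-id>", "<resource-group>",
                                   "ADF-Databricks-day18", "pipeline1", "<arm-token>")
trigger = daily_schedule_trigger("daily-day18", "pipeline1", "2020-12-19T06:00:00Z")
print(run_req.get_method(), run_req.full_url)
print(json.dumps(trigger, indent=2))
```

A trigger does nothing until it is started. In the UI that happens when you activate it and publish. Over REST, there is a separate `start` operation on the trigger.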

In this manner you can schedule Azure Databricks work and connect other services to it. Tomorrow we will look into adding a Python element or another notebook to make more use of Azure Data Factory.

The complete set of code and notebooks will be available in the GitHub repository.

Happy Coding and Stay Healthy!
