Markov chain Monte Carlo doesn’t “explore the posterior”

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

First some background, then the bad news, and finally the good news.

Spoiler alert: The bad news is that exploring the posterior is intractable; the good news is that we don’t need to explore all of it.

Sampling to characterize the posterior

There’s a misconception among Markov chain Monte Carlo (MCMC) practitioners that the purpose of sampling is to explore the posterior. For example, I’m writing up some reproducible notes on probability theory and statistics through sampling (in pseudocode with R implementations) and have just come to the point where I’ve introduced and implemented Metropolis and want to use it to exemplify convergence mmonitoring. So I did what any right-thinking student would do and borrowed one of my mentor’s diagrams (which is why this will look familiar if you’ve read the convergence monitoring section of Bayesian Data Analysis 3).

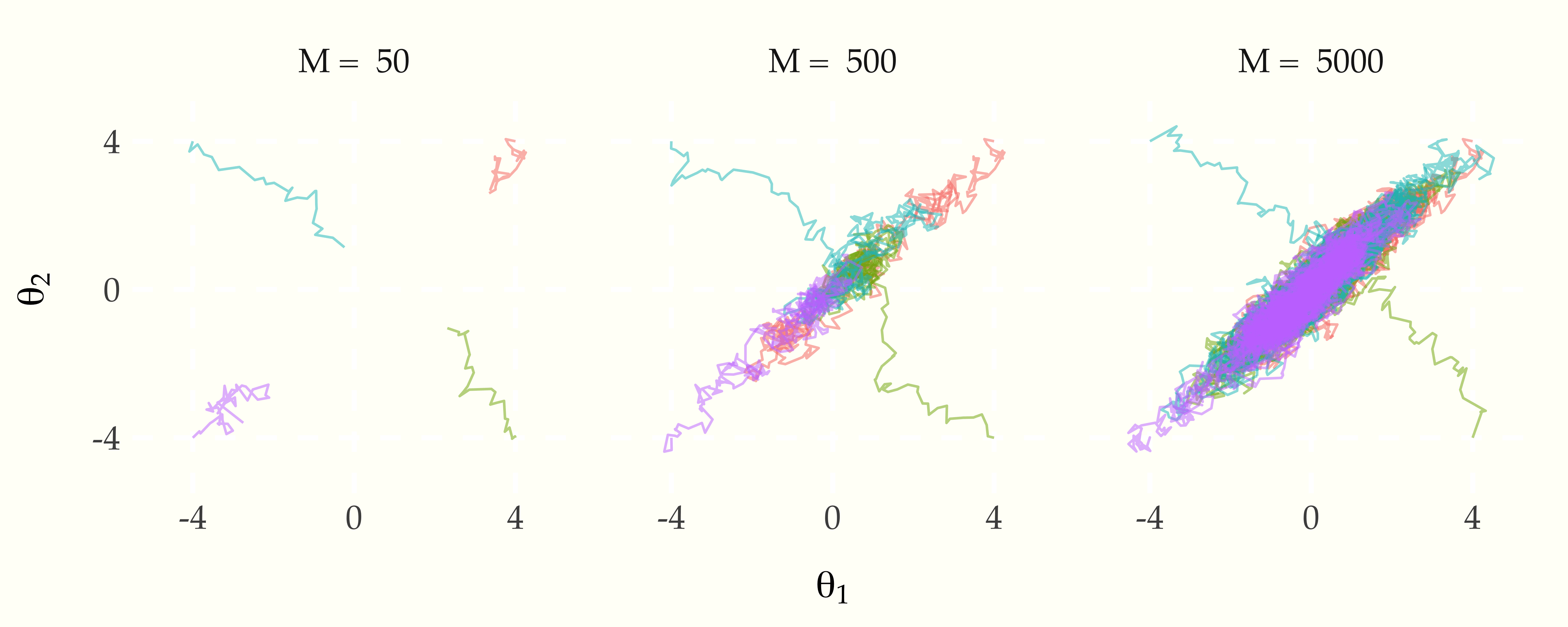

First M steps of of isotropic random-walk Metropolis with proposal scale normal(0, 0.2) targeting a bivariate normal with unit variance and 0.9 corelation. After 50 iterations, we haven’t found the typical set, but after 500 iterations we have. Then after 5000 iterations, everything seems to have mixed nicely through this two-dimensional example.

This two-dimensional traceplot gives the misleading impression that the goal is to make sure each chain has moved through the posterior. This low-dimensional thinking is nothing but a trap in higher dimensions. Don’t fall for it!

Bad news from higher dimensions

It’s simply intractable to “cover the posterior” in high dimensions. Consider a 20-dimensional standard normal distribution. There are 20 variables, each of which may be positive or negative, leading to a total of , or more than a million orthants (generalizations of quadrants). In 30 dimensions, that’s more than a billion. You get the picture—the number of orthant grows exponentially so we’ll never cover them all explicitly through sampling.

Good news in expectation

Bayesian inference is based on probability, which means integrating over the posterior density. This boils down to computing expectations of functions of parameters conditioned on data. This we can do.

For example, we can construct point estimates that minimize expected square error by using posterior means, which are just expectations conditioned on data, which are in turn integrals, which can be estimated via MCMC,

where are draws from the posterior

If we want to calculate predictions, we do so by using sampling to calculate the integral required for the expectation,

If we want to calculate event probabilities, it’s just the expectation of an indicator function, which we can calculate through sampling, e.g.,