Revisiting MPs’ Expenses

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

I couldn’t but notice the chatter about Iain Duncan Smith claiming he’d have no problem “living on 53 pounds a dayweek“, which made me wonder not only how many meal catered events he attends each week (and how many of his scheduled meeting also have complementary tea and biscuits (a bellweather of the extent of cuts in many institutions…;-), but also how he fares on the expenses stakes…

For the last couple of years, details about MPs’ expense claims have been published via the Independent Parliamentary Standards Authority (IPSA) website. The data seems to be most easily grabbed as CSV files containing all MPs’ claims for a parliamentary session (or tax year?) – eg 2012/13 or 2011/2012. As you might expect, that means the files are relatively large – 20MB (~100,000 rows) for 12/13, or just over 40MB (~190k rows) for 2011/12.

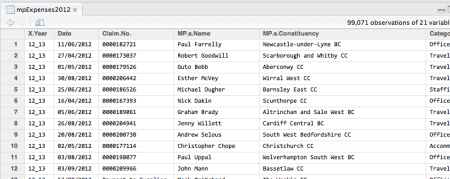

Files of this size are fine if you’re happy working with files of this size (?!), but can be a pain if you aren’t… So here are a couple of ways getting the data into a more manageable form, starting from raw data files that look something like this…

The file is made up of a series of rows, one per expense entry, with common columns. If we loaded this data into a spreadsheet application such as Excel or Google Spreadsheets, we’d see a single sheet containing however many tens of thousands of rows of data. Assuming that the application could cope with loading such a large amount of data of course… which it might not be able to do…which means we may need to make the data file a bit more manageable, somehow…

Let’s start with grabbing data relating to Iain Duncan Smith’s expense claims. On a Linux box, or a Mac, this is easy enough from a Terminal command line. (On Windows, something like cygwin should provide you with equivalents of some of the more useful unix tools.) For example, the grep command let’s us pull just the rows that contain the phrase Iain Duncan Smith:

grep "Iain Duncan Smith" DataDownload_2012.csv > IDS_expenses_2012.csv

This reads along the lines of: search through the file “DataDownload_2012.csv” looking for rows that contain “Iain Duncan Smith”, then copy those rows and only those rows into the file “IDS_expenses_2012.csv”

For what it’s worth, I’ve posted IDS’ expenses data from 2011 and 2012 to a couple of Google Fusion Tables: 2011/12, 2012/13

Another way of wrangling the data into a state we can start to play with it is to load it into RStudio, where we can start applying magical R incantations to it.

mpExpenses2012 = read.csv("~/Downloads/DataDownload_2012.csv")

We can then generate a subset of the data containing just IDS’ data:

ids2012=subset(mpExpenses2012, MP.s.Name=="Iain Duncan Smith")

We can also generate a combined data set of IDS’ expense claims from both 2011/12 and 2012/13, for example:

mpExpenses2011 = read.csv("~/Downloads/DataDownload_2011.csv")

ids2011=subset(mpExpenses2011, MP.s.Name=="Iain Duncan Smith")

ids11and12=rbind(ids2011,ids2012)

However, all is not well…

On loading the 2011 data into R, 158320 observations are loaded in. The actual number of rows (including the header – so one more row than the number of “observations”) can be given by running a line count (from the terminal/command line) over the original file:

wc -l DataDownload_2011.csv

187447 DataDownload_2011.csv

That is, 187447 rows…

If we try to pull out a list of MPs’ names using the levels command:

mpNames=data.frame(name=levels(mpExpenses2011$MP.s.Name))

we find that as well as the expected MPs names, there’s some “bad” data:

(What we expect to see in the name column is a list of MP names, one unique name per row.)

This is, of course, the way of the world. Folk who publish data rarely if ever, provide a demonstration of how to actually open it cleanly into an application (typically because data publishers think that once they have published the data, it’s bound to be all right and doesn’t need checking. This is, however, rarely true…although, for the 2012/13 data, there are 99071 loaded observations against 99072 (including 1 header) rows in the download file, which does seem to be correct).

What we should do now, of course, is go into a an ETL (or at least, TL) debug mode on the 2011 data and try to figure out what’s going wrong with the simple import… or we just work with the data we have and try to work around the dodgy rows…

…or we limit ourselves to the 2012 data, which does seem okay…

So if we do that, what other sorts of investigation come to mind?

One thing that came to mind after skimming the data…

was a “rail travel fares according to MPs’ expenses” lookup table.

So for example, we might start by creating a subset of the data based on expenses categorised as “Travel” and then look to see what sorts of trvel classification falls within that Category:

travel=droplevels(subset(mpExpenses2012,Category=="Travel"))

levels(travel$Expense.Type)

Here’s what we get:

[1] "Car Hire" "Car Hire Fuel" "Car Hire Fuel MP" [4] "Car Hire Fuel MP Staff" "Car Hire Insurance MP Staff" "Car Hire MP" [7] "Car Hire MP Staff" "Congest. Zone/Toll Seas Ticket" "Congestion Zone/Toll" [10] "Congestion Zone/Toll Dependant" "Congestion Zone/Toll MP" "Congestion Zone/Toll MP Staff" [13] "Food & Drink" "Food & Drink @ Parliament" "Food & Drink @ Parlmnt OFF Est" [16] "Food & Drink MP" "Food & Drink MP Staff" "Hotel Late Sitting" [19] "Hotel Late Sitting > 1.00" "Hotel London Area MP Staff" "Hotel NOT London Area (Travel)" [22] "Hotel NOT London Area MP Staff" "Hotel Outside UK" "Own Bicycle MP" [25] "Own Car Dependant" "Own Car MP" "Own Car MP Staff" [28] "Own Vehicle Bicycle" "Own Vehicle Bicycle MP Staff" "Own Vehicle Car" [31] "Own Vehicle Car Dependant" "Own Vehicle Car MP Staff" "Own Vehicle Mot Cycle MP Staff" [34] "Parking" "Parking Dependant" "Parking MP Staff" [37] "Parking Season Ticket" "Public Tr AIR" "Public Tr AIR Dependant" [40] "Public Tr AIR MP Staff" "Public Tr BUS" "Public Tr BUS MP Staff" [43] "Public Tr COACH" "Public Tr COACH MP Staff" "Public Tr FERRY" [46] "Public Tr FERRY MP Staff" "Public Tr OTHER" "Public Tr OTHER Dependant" [49] "Public Tr OTHER MP Staff" "Public Tr RAIL - RTN" "Public Tr RAIL - SGL" [52] "Public Tr RAIL Dependant - RTN" "Public Tr RAIL Dependant - SGL" "Public Tr RAIL Foreign" [55] "Public Tr RAIL MP Staff - RTN" "Public Tr RAIL MP Staff - SGL" "Public Tr RAIL Other" [58] "Public Tr RAIL Other Dependant" "Public Tr RAIL Other MP Staff" "Public Tr RAIL Railcard" [61] "Public Tr RAIL Railcd MP Staff" "Public Tr RAIL Sleeper Suppl" "Public Tr Season Ticket" [64] "Public Tr UND" "Public Tr UND Dependant" "Public Tr UND MP Staff" [67] "Public Tr Underground MP" "Taxi" "Taxi After Late Sitting" [70] "Taxi after Late Sitting 11pm" "Taxi Dependant" "Taxi MP" [73] "Taxi MP Staff" "Taxi Working Late After 9pm" "Taxi Working Late Before 9pm"

We might further pull out just the rows relating to rail travel (almost 10,000 rows from the 2012/13 dataset):

rail=droplevels(subset(travel,grepl("Rail",Expense.Type,ignore.case=TRUE)))

and then we might start looking to see who’s travelling First vs. who’s travelling Standard, as well as building up a database of rail fares between locations as claimed on expenses. But that’s for another day…

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.