Decoding a Substitution Cipher using Simulated Annealing

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

My last post discussed a method to decode a substitution cipher using a Metropolis-Hastings algorithm. It was brought to my attention that this code could be improved by using Simulated Annealing methods to jump around the sample space and avoid some of the local maxima. Here is a basic description of the difference:

In a basic Metropolis-Hastings algorithm, it is relatively unlikely that we will jump to states of the system which decrease our cost function (note: we usually try to decrease the cost function, but in the code below our goal is to maximize it instead). The algorithm greedily tries to attain a maximum value of the cost function, and thus is very prone to hitting local maxima instead of the global one.

In contrast, when we use simulated Annealing methods we are much more likely to jump to “worse” states during early iterations. This is because in the early stages, we want to jump around the sample space and try to find where the global maximum will occur. We can also build a characteristic into the algorithm which will “cool-off” as the algorithm iterates. What I mean by this is that our likelihood to jump to “worse” states will slowly decrease as the algorithm iterates.

In a standard Metropolis-Hastings algorithm, we jump to state with probability

, when

. In my Annealing Method to decode a cipher, I first fix a temperature

, and move to state

with probability

when

, where

is the number of iterations and

is the current iteration number. The reasoning behind this acceptance probability is that at first, we are basically multiplying the acceptance probability by

, and by the end we are basically not changing the acceptance probability at all from

. Try plugging in

, and

for

to verify this. The result is the following transition probability function, which can perform both the Annealing and Metropolis-Hasting probability calculations.

# Function to calculate the transition probability.

Prob = function(pl.fstar, pl.f, temp, i, iter, method = "Anneal") {

if(method == "Anneal") {

flip = (pl.fstar/pl.f)*temp/((temp - 1)*(i/iter) + (iter-i)/iter)

}

else{

flip = pl.fstar/pl.f

}

return(flip)

}

An additional variable that I added was a method of changing neighbors. In very large sample spaces, it can take a lot of transpositions to move from one state to another. In fact, the furthest state from any given state is , where $n$ is the length of the permutation. In our case, that is 1326 moves. The chance that the previous algorithm would get stuck in a local maximum en route is very high. We combat this by allowing the neighbors of any particular permutation to be farther away. Consider the following neighbor-generating function.

# Function to generate a new candidate function.

# Inputs:

# f: the function we want to find a neighbor of.

# N: the domain size of f

# top: the maximum number of elements to transpose.

Neighbor = function(f, N = length(f), top = 2) {

# Decode how many elements to switch (between 2 and top)

if(top == 2) {

num = 2

}

else {

num = sample(2:top, 1)

}

# Which elements to switch?

swit = sample(1:N, num)

# Decide how to transpose our elements

if(num == 2) {

ord = 2:1

}

else {

ord = sample(1:num)

}

# Prevent the case where we do not

# switch any elements.

while(all(ord == 1:num)) {

ord = sample(1:num)

}

# fstar will be a neighbor of f

fstar = f

fstar[swit] = f[swit[ord]]

return(fstar)

}

We supply the function with the maximum number of elements, , it is allowed to transpose, and it decides to transpose between 2 and

elements, at random (uniformly). It then decides on a random ordering of that transposition (there are a lot of ways to move around 10 different elements, for example). The function has a check to ensure that it does not simply output the same function as was input.

Another idea I came up with to allow the algorithm to zero-in on a good solution was to make the algorithm start doing single-transpositions eventually. This allows us to fine-tune our “good” solution in the later iterations of the algorithm. In the code, we use the “attack” parameter to determine how quickly we begin our fine-tuning of our solution. Small values of “attack” correspond with a lot of jumping around throughout the iterations, while high values correspond with us fine-tuning our algorithm earlier on in the iterations. The result is our final algorithm-decoding function.

# Generate the plausability of fstar

new.pl <- function(fstar, converted, len, trans) {

test.mat = fstar[converted]

compare = matrix(c(test.mat[1:(len -1)], test.mat[2:len]), ncol = 2)

pl.fstar = prod(trans[compare])

return(pl.fstar)

}

# Inputs:

# trans: a matrix of transition probabilities

# dictionary: list of possible characters

# message: the secret message

# iter: number of iterations

# temp: the initial temperature of the system

# attack: parameter to determine how quickly we

# approach single-transposition versus

# multiple-transpositions. High values

# of "attack" will cause us to rapidly approach

# single-transpositions.

DecodeMessage = function(trans, dictionary, message, iter = 1000,

temp = 10, method = "Anneal",

attack = 1) {

# Size of the dictionary

N = length(dictionary)

# Number of characters in the message.

len = length(message)

# Convert the matrix to the cooresponding numeric value in

# the dictionary.

converted = 0*(1:len)

for(i in 1:len) {

converted[i] = which(dictionary == message[i])

}

# Take a random guess at the permutation:

# f will be 'permanent', fstar will be a guess.

f = sample(N, N)

fstar = f

best.guess = f

# Keep track of the plausability of the best guess,

# and of the current state of the system.

pl.f = 0*(1:iter)

pl.f.max = 0

# There is no reason to not gerate all of the

# random numbers at once, since they are independent.

test.val = runif(iter, 0, 1)

for(i in 1:iter) {

pl.fstar = new.pl(fstar, converted, len, trans)

# flip is the probability that we switch to

# fstar. Note that this function will output

# values greater than 1, in which case we will always

# switch.

flip = Prob(pl.fstar, pl.f[i], temp, i, iter, method)

if(flip >= test.val[i]) {

f = fstar

pl.f[i] = pl.fstar

}

# Keep track of the best over-all guess.

if(pl.f[i] > pl.f.max) {

best.guess = f

pl.f.max = pl.f[i]

}

# Periodically output the current state

# of the decoding candidates.

if(i %% 10000 == 0) {

cat(dictionary[f[converted]][1:44])

cat("\n")

}

# Generate the "top" input for the Neighbor function.

top = round(2 + (N - 2)*((iter - i)/iter)^attack)

# Generate a new candidate function,

# and initiate next pl.f value.

fstar = Neighbor(f, N, top)

pl.f[i + 1] = pl.f[i]

}

return(list(current = dictionary[f[converted]],

pl.f = pl.f,

best.guess = dictionary[best.guess[converted]],

best.pl = pl.f.max))

}

Here we read in the data, and format the transitions matrix (to prevent infinity and 0 values of the plausibility function).

# Read in the matrix of transitions

trans = as.matrix(read.csv("http://www2.research.att.com/~volinsky/DataMining/Columbia2011/HW/KennyMCMC/wp_transition_counts.csv",

header = FALSE))

# Vector of all possible characters

dictionary = scan("http://www2.research.att.com/~volinsky/DataMining/Columbia2011/HW/KennyMCMC/wp_char_vector_sort.txt",

what = character(), sep = "\n")

# The secret message

message = scan("http://www2.research.att.com/~volinsky/DataMining/Columbia2011/HW/KennyMCMC/message1.txt",

what = character())

# Separate the message into individual characters

message = unlist(strsplit(do.call(paste, as.list(message)), NULL))

# We change the probabilities of transition so that there are

# no zero-values using a sort of Laplacian smoothing, and

# find something to multiply the values by so that we dont get 0

# probability (restrictions on Rs computational precision)

fixed.trans1 = log(trans + 1)

fixed.trans1 = 50*(fixed.trans1 + 1)/(rowSums(fixed.trans1) + 51)

# Set a seed to make this reproducible...

set.seed(8675309)

# Set a different, random seed, as to avoid some bias

# (your random number will still be the same as mine here)

set.seed(runif(1, 0, 999999))

Here is a couple of sample calls of the function. One uses the standard Metropolis-Hastings algorithm with the varying neighbor-generating function; the other uses the Annealing method and the varying neighbor-generating function. I semi-arbitrarily chose “attack” to be 10 here, and temp to be 100000

DecodeMessage(fixed.trans1, dictionary, message,

iter = 100000, temp = 10000,

method = "Anneal", attack = 10) -> annel

u o t e s c o s p t c i t - o h e s t i s t c l o t n e d c t i n t n o - d ' a d y t

z a t e s c a s p t c o t - a k e s t o s t c h a t u e l c t o u t u a - l y i l b t

w a t e n c a n p t c o t g a d e n t o n t c h a t f e r c t o f t f a g r u i r y t

r e i n t e n d t o k e v i n o n t h e w i s p t o w w e k s u a s f

w e i n c e n d c o r e g i n o n c h e m i s t c o m m e r s u a s y

w e i n c e n d c o b e g i n o n c h e f i s t c o f f e b s u a s y

w e i n t e n d t o b e g i n o n t h e f i l s t o f f e b l u a l y

w e i n t e n d t o b e g i n o n t h e f i r s t o f f e b r u a r y

w e i n t e n d t o r e g i n o n t h e v i s f t o v v e r s u a s y

w e i n t e n d t o p e g i n o n t h e f i l s t o f f e p l u a l z

DecodeMessage(fixed.trans1, dictionary, message,

iter = 100000, method = "Metrop",

attack = 10) -> metrop

p i a n s i n v s o c i g a n o n s h i f a d m s o f f i c d u e d w

k e i n c e n f c o y e g i n o n c h e w i s t c o w w e y s u a s "

k e i n c e n d c o w e g i n o n c h e f i s t c o f f e w s u a s y

w e i n t e n d t o b e g i n o n t h e f i r s t o f f e b r u a r y

w e i n t e n d t o b e g i n o n t h e f i l s t o f f e b l u a l y

w e i n t e n d t o y e g i n o n t h e f i r s t o f f e y r u a r v

w e i n t e n g t o p e d i n o n t h e v i r f t o v v e p r u a r k

w e i n t e n v t o b e g i n o n t h e f i r s t o f f e b r u a r y

w e i n t e n d t o z e k i n o n t h e f i l s t o f f e z l u a l y

w e i n t e n d t o b e g i n o n t h e f i r s t o f f e b r u a r y

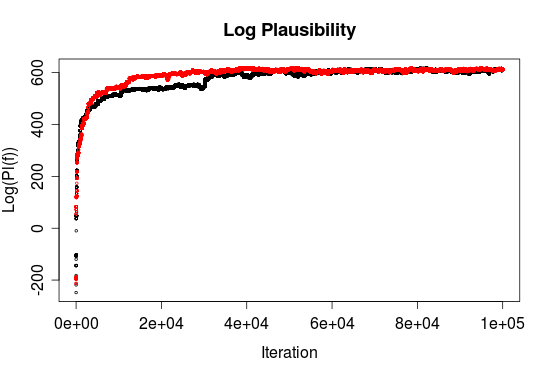

We get the following graph of the Plausibility, for each method. The black is the Annealing method, the red is the Metropolis method.

Note that the Annealing method drops down more often, but the two eventually converge to similar values. Here are the final decodings of the message by the different methods:

> cat(annel$best.guess, fill = 60) w e i n t e n d t o b e g i n o n t h e f i r s t o f f e b r u a r y u n r e s t r i c t e d s u b m a r i n e w a r f a r e ! w e s h a l l e n d e a k o r i n s p i t e o f t h i s t o v e e p t h e u n i t e d s t a t e s o f a m e r i c a n e u t r a l ! i n t h e e k e n t o f t h i s n o t s u c c e e d i n g , w e m a v e m e x i c o a p r o p o s a l o f a l l i a n c e o n t h e f o l l o w i n g b a s i s ? m a v e w a r t o g e t h e r , m a v e p e a c e t o g e t h e r , g e n e r o u s f i n a n c i a l s u p p o r t a n d a n u n d e r s t a n d i n g o n o u r p a r t t h a t m e x i c o i s t o r e c o n q u e r t h e l o s t t e r r i t o r y i n t e x a s , n e w m e x i c o , a n d a r i z o n a ! t h e s e t t l e m e n t i n d e t a i l i s l e f t t o y o u ! y o u w i l l i n f o r m t h e p r e s i d e n t o f t h e a b o k e m o s t s e c r e t l y a s s o n a s t h e o u t b r e a v o f w a r w i t h t h e u n i t e d s t a t e s o f a m e r i c a i s c e r t a i n a n d a d d t h e s u g g e s t i o n t h a t h e s h o u l d , o n h i s o w n i n i t i a t i k e , i n k i t e j a p a n t o i m m e d i a t e a d h e r e n c e a n d a t t h e s a m e t i m e m e d i a t e b e t w e e n j a p a n a n d o u r s e l k e s ! p l e a s e c a l l t h e p r e s i d e n t . s a t t e n t i o n t o t h e f a c t t h a t t h e r u t h l e s s e m p l o y m e n t o f o u r s u b m a r i n e s n o w o f f e r s t h e p r o s p e c t o f c o m p e l l i n g e n g l a n d i n a f e w m o n t h s t o m a v e p e a c e ! > cat(metrop$best.guess, fill = 60) w e i n t e n d t o b e g i n o n t h e f i r s t o f f e b r u a r y u n r e s t r i c t e d s u b m a r i n e w a r f a r e . w e s h a l l e n d e a v o r i n s p i t e o f t h i s t o k e e p t h e u n i t e d s t a t e s o f a m e r i c a n e u t r a l . i n t h e e v e n t o f t h i s n o t s u c c e e d i n g , w e m a k e m e x i c o a p r o p o s a l o f a l l i a n c e o n t h e f o l l o w i n g b a s i s : m a k e w a r t o g e t h e r , m a k e p e a c e t o g e t h e r , g e n e r o u s f i n a n c i a l s u p p o r t a n d a n u n d e r s t a n d i n g o n o u r p a r t t h a t m e x i c o i s t o r e c o n j u e r t h e l o s t t e r r i t o r y i n t e x a s , n e w m e x i c o , a n d a r i z o n a . t h e s e t t l e m e n t i n d e t a i l i s l e f t t o y o u . y o u w i l l i n f o r m t h e p r e s i d e n t o f t h e a b o v e m o s t s e c r e t l y a s s o n a s t h e o u t b r e a k o f w a r w i t h t h e u n i t e d s t a t e s o f a m e r i c a i s c e r t a i n a n d a d d t h e s u g g e s t i o n t h a t h e s h o u l d , o n h i s o w n i n i t i a t i v e , i n v i t e " a p a n t o i m m e d i a t e a d h e r e n c e a n d a t t h e s a m e t i m e m e d i a t e b e t w e e n " a p a n a n d o u r s e l v e s . p l e a s e c a l l t h e p r e s i d e n t ' s a t t e n t i o n t o t h e f a c t t h a t t h e r u t h l e s s e m p l o y m e n t o f o u r s u b m a r i n e s n o w o f f e r s t h e p r o s p e c t o f c o m p e l l i n g e n g l a n d i n a f e w m o n t h s t o m a k e p e a c e .

In this case, the Metropolis method seems to have found the better decoding. But that is not necessarily the point. The goal of the Annealing method is to avoid local maxima, and it was successful in that pursuit (as evidenced by the readability of the message).

I have had varying results with both methods, depending on parameter inputs. It is not clear which one is better of these two, and I do not have the computing power to benchmark them. In any event, there is probably some fine-tuning of the parameters that could be done in order to improve the efficacy of the decoding methods. They do seem to both come close to the correct message more often than the code in my last post.

This post is linked to on http://www.R-bloggers.com

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.