Mastering the Art of Data Preparation I

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Without a solid foundation, you’ll have trouble creating anything of value. ~ Erika Oppenheimer

Although building models to predict the future outcome is the ultimate goal of any study, getting the data and preparing it for analysis is the foundation based on which the models are built. Although often neglected, this quintessential step is of paramount importance for the success of the analytical models build over it later.

The Model Building Process Flow now -:

- This section will focus on extracting the data from variate sources like files (Excel, CSV), web based sources like (XML, HTML), API’S (JSON) and RDBMS sources (MYSQL).

- We will be using R, an open source statistical package for this purpose. If you do not have R, please follow to link below to see how you can install it.

- http://web.cs.ucla.edu/~gulzar/rstudio/

Downloading Files from Web

While files can be downloaded manually and stored, it is easier to automate the process. We will use R to automate the download process for files.

getwd() #Gets you the current working directory

The file can be downloaded directly from the website as -:

#check if a folder/directory called data exists under the current working directory, if not, create one

if(!file.exists(“data”)) + dir.create(“data”)

#Specify the URL of the file i.e the address where the file is located and download it

>fileUrl<-”https://www.datazar.com/file/fa50d8235-d84a-45a8-834c-b3338fac8eb0" > data<- download.file(fileUrl,destfile = “./data/TitanicPasaangers.csv”)

Although data can be downloaded manually and saved, automating it will ensure, faster and organized download, moreover, then the code becomes reproducible as data need not be downloaded manually multiple times by different users.

Loading Files for Analysis

Excel Files

This is one of the most commonly used file formats. After downloading the data using the above steps, we need to load the data into R so that it is available for analysis.

Step 1 — Install the xlsx package

> install.packages(“xlsx”) #downloads the package xlsx > library(“xlsx”) #loads the required packages into R

Step 2 — Read the file

Passenger_Data_Excel<- read.xlsx(“./data/Titanic_Passengers.xlsx”, sheetIndex = 1 , header = TRUE) #Loading data into R

Step 3 — Displaying the records

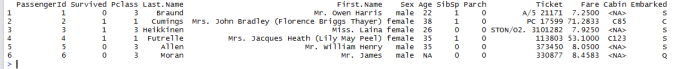

> head(Passenger_Data_Excel) # displaying top 6 records

XML Files

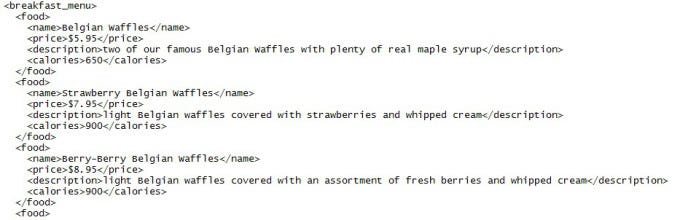

XML or, extensible markup language, is document formatting language used for some World Wide Web pages. It forms the basis of web scraping.

- We will use the following page to web scrap data from it.

- http://www.w3schools.com/xml/simplexsl.xml

#loading the requisite library

library(XML)

#Parses an XML or HTML file or string containing XML/HTMLcontent and generates an R structure representing the XML/HTML tree

document=xmlTreeParse(“http://www.w3schools.com/xml/simplexsl.xml",useInternalNodes = TRUE)

#This gives the root node of the document

rootNode <- xmlRoot(document)

# This displays all the tags under the parent node.

rootNode

Getting the values of specific attributes –

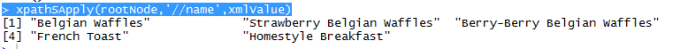

- Suppose we would want to know the names of all the products available in breakfast, we can use a special function called xpathSApply, which goes to every single value of elements inside the root node.

- However since we want the value of a specific tag called name, we specify the node name inside the function, so that we get the values only for those tags.

> xpathSApply(rootNode,’//name’,xmlValue)

JSON Data

JSON or Java script object notation is light weight compared to XML, also it is the most common format of data from Application Programming Interfaces. (API’s).

Extracting Json Data

Step 1- Install jsonlite package and load it into R and load it.

install.packages(“jsonlite”) library(jsonlite)

Step 2- Extract data from an API returning Json data using fromJSON function.

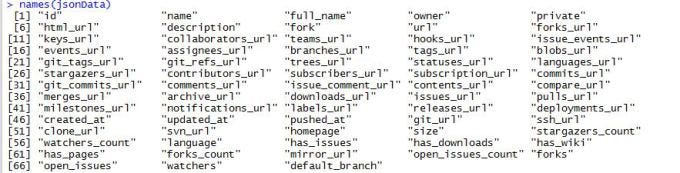

jsonData <- fromJSON(“https://api.github.com/users/pramit-uc/repos") #This contains a list of github repositories I contribute to.

Step 3 — See the name of the array elements returned as Json Data

names(jsonData)

Step 4- Access data within an array, For ex Suppose we want to check all the elements contained within “owner”.

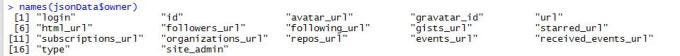

names(jsonData$owner)

Step 5- Access values of elements within an array.For example, if we want to get the list of users who had logged in.

jsonData$owner$login

Thank you, happy reading! Next week we will be back with the second part, where we will talk about how we can extract and prepare data from sources like SQL and HDF5.

Mastering the Art of Data Preparation I was originally published in Datazar Blog on Medium, where people are continuing the conversation by highlighting and responding to this story.

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.