Latent class mixed models – with graphics

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

Foreword

Apologies that the output isn’t showing well as far as spacing and alignment is concerned. Am working on fixing that!

Background

Generally when we have a set of data, we have known groupings. Be that three different treatment groups, two sex groups, 4 ethnicity groups etc. There is also the possibility of unobserved groupings within your data, some examples (I’m clutching at straws here) are those who are vegetarians and those who aren’t, those who regularly exercise and those who don’t, or those who have a family history of a certain condition and those who don’t (assuming those data were not collected). There is an approach to look into this.

Model and implementation

I’m no expert on anything, let alone the literature for this, yet. Terms used are Latent Class Analysis (LCA) and Mixture Modeling (MM) (sometimes Finite MM). From what I can tell these are largely the same, certainly they’re from the same ‘school’ of approaches to investigating this sort of occurrence within a data set. Reading the first few lines of the wiki page for LCA and MM would lead you to think that the differences are technical (minimal?). It would be nice if with that I’ve put my foot in it enough for someone to comment and provide a very clear, concise explanation of the differences!

It makes sense then given the above, that the R package that I am about to use is called ‘Latent Class Mixed Modeling’ LCMM. As you would expect, modeling of this nature is implemented in other software as well. LatentGold and Mplus are (paid) options, and there are implementations within most common statistics packages. Applying this methodology to longitudinal data can present further challenges. ProcTraj implemented through SAS is a popular option for this.

What I, personally, found clumsy in the both the ProcTraj and the Mplus environment, was how to handle data where not everyone’s observations were observed at the exact same time points. In tightly designed studies where all subjects are measured at baseline, 6 months then 12 months these other packages seem straight forward. However these packages do not seem intuitive in the way they handle this sort of data from an observational epidemiology setting, where people are diagnosed at different ages, people leave the study (area) or pass away and hence data collection ends at different times, or just if you chose a point in time from which to analyse your data hence subjects have differing amounts of data available.

My example

To see the lcmm package in action, we have the following data set:

> str(dat)

'data.frame': 43108 obs. of 10 variables:

$ id : chr "I072028" "I072028" "I072028" "I072028" ...

$ x : num 155 157 158 159 161 ...

$ x0 : num 155 155 155 155 155 ...

$ y : num 29.1 23.4 21.6 22.1 25.7 ...

$ rf1 : Factor w/ 2 levels "A","B": 2 2 2 2 2 2 2 2 2 2 ...

$ rf2 : chr "2003" "2003" "2003" "2003" ...

$ rf3 : Factor w/ 6 levels "pre 94","95-97",..: 4 4 4 4 4 4 4 4 4 4 ...

$ rf4 : num 0.134 1.474 2.278 4.02 5.628 ...

$ totobs: int 33 33 33 33 33 33 33 33 33 33 ...

$ x2 : num 24162 24580 24833 25385 25900 ...

> head(dat)

id x x0 y rf1 rf2 rf3 rf4 totobs x2

1 I072028 155.440 155.3336 29.12 B 2003 01-03 0.134 33 24161.59

2 I072028 156.780 155.3336 23.40 B 2003 01-03 1.474 33 24579.97

3 I072028 157.584 155.3336 21.58 B 2003 01-03 2.278 33 24832.72

4 I072028 159.326 155.3336 22.10 B 2003 01-03 4.020 33 25384.77

5 I072028 160.934 155.3336 25.74 B 2003 01-03 5.628 33 25899.75

6 I072028 162.408 155.3336 23.66 B 2003 01-03 7.102 33 26376.36

Here x is our independent variable (think age), y is our dependent outcome (say cholesterol levels), id is our id variable to identify which data comes from which subjects. rf1 and rf2 are two risk factors that we may look to incorporate at a later time and totobs lets us know how many observations each subject has.

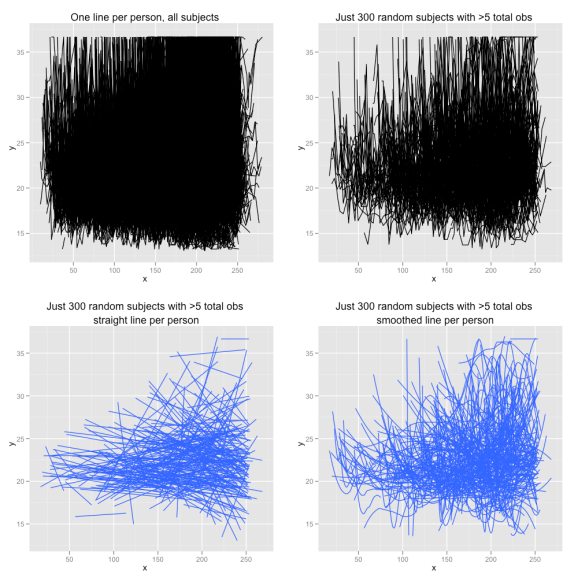

So with >2000 subjects and up to 80 time points per subject, where do you start? Well, let’s plot everyone’s data.

Some might say the first graph (top left) shows you nothing. I don’t. You can clearly see the top right portion is far denser than the top left. So more people finish with a higher value than start with a higher value. Interesting. The next three plots show just 300 random subjects with at least 5 time points (to avoid the clutter a bit). The top right is a plot of their raw data, bottom left is a straight line fit for each person, and the bottom right is a smoothed curve (loess) for each person, fitted using the default settings of geom_smooth() from the beyond awesome ggplot2 package. Removing this clutter even further highlights the increased spread at the right of the graph compared to the left.

Segway

I just wanted to quickly show (visually) how the loess smoothing algorithm works. Here are 3 subjects with a reasonable amount of data. This shows their raw (jagged) plot, their smoothed curve, and the two combined to highlight how the trend is accurately portrayed but the noise is removed to allow the eye to identify the path of the trend with ease.

Back on track

You can see all the input options for the lcmm function by looking at the pdf or using ?lcmm after loading the library. The user is able to specify how many latent class (unobserved groups) they would like the algorithm to find. I used the following code to find 2 in my data*.

d2 <-lcmm(y~x,random=~x,subject='id',mixture=~x,ng=2,idiag=TRUE,data=dummy,link="linear") summary(d2)

Here I am modeling the dependent y with the independent x, allowing x to vary per person (random slope) and using (linear) x as the variable in the mixture term. Below I will look at more appropriate models, am keeping it simple for now. In the output (below) we see the results from the main section of the summary. This highlights, with the ‘x 1′ and ‘x 2′ line, that each class has a different slope, one slightly positive and one slightly negative, with (see ‘intercept 2′) quite different intercepts.

Fixed effects in the class-membership model:

(the class of reference is the last class)

coef Se Wald p-value

intercept 0.4665299 0.1712701 2.723943 0.006450762

Fixed effects in the longitudinal model:

coef Se Wald p-value

intercept 1 (not estimated) 0.000000000

intercept 2 -4.476219941 0.2091687393 -21.400043 0.000000000

x 1 -0.002339034 0.0008328176 -2.808579 0.004976061

x 2 0.023911923 0.0011908951 20.078950 0.000000000

Goodness of fit statistics are also provide. Of interest is the posterior probabilities of group membership. Each subject is assigned a probability of belong to each (of the 2) class(es).

postprob(d2)

Posterior classification:

class1 class2

N 152.00000 93.00000

% 62.04082 37.95918

Posterior classification table:

--> mean of posterior probabilities in each class

prob1 prob2

class1 0.9330 0.0665

class2 0.0934 0.9070

We see one class has 62% of subjects and the other has 38%. We see that of those in class 1 their mean posterior probability of belonging to class 1 was 0.93 while their mean PP of belonging to class 2 was 0.09. Similarly, of those assigned to class 2, their mean PP of belonging to class 2 was 0.90 and 0.07 for belonging to class 1. We can look further at this with the code below. The minimums are close to 0.5 (somewhat expected), so we know that some subjects trajectories could have really been in either class. I take solace in both lower quartiles being >0.80.

> round(summary(as.numeric(d2$pprob[d2$pprob[,"class"]==1,"prob1"])),2)

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.51 0.94 1.00 0.93 1.00 1.00

> round(summary(as.numeric(d2$pprob[d2$pprob[,"class"]==2,"prob2"])),2)

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.54 0.83 0.99 0.91 1.00 1.00

Given we are dealing with trends and trajectories, we might like to visualise what this means for our data. Using the code below, we can pull out which class subjects were assigned to, and then plot their data, colouring based on class membership.

dummy$id <- as.character(dummy$id) people2 <- as.data.frame(d2$pprob[,1:2]) dummy$group2 <- factor(people2$class[sapply(dummy$id, function(x) which(people2$id==x))]) p1 <- ggplot(dummy, aes(x, y, group=id, colour=group2)) + geom_line() + geom_smooth(aes(group=group2), method="loess", size=2, se=F) + scale_y_continuous(limits = c(13,37)) + labs(x="x",y="y",colour="Latent Class") + opts(title="Raw") p2 <- ggplot(dummy, aes(x, y, group=id, colour=group2)) + geom_smooth(aes(group=id, colour=group2),size=0.5, se=F) + geom_smooth(aes(group=group2), method="loess", size=2, se=T) + scale_y_continuous(limits = c(13,37)) + labs(x="x",y="y",colour="Latent Class") + opts(title="Smoothed", legend.position="none") grid.arrange(p1,p2, ncol=2, main="2 Latent Class")

This produces the following graph.

Here we see what we wanted to see. For starters the lines when smoothing across all data within a class intersect, suggesting we are seeing a real effect of classes. I would not be too interested, myself, in seeing two parallel lines, as that would suggest everyone has the same ‘kind’ of trajectory, and I would suspect the classes to be purely an artifact of the algorithm having to ‘go out and find two classes’. In saying that, we knew from the coefficients that we would see something like this. What I particularly like is that we can see a group (class), class 2, who clearly rocket up at the end. When x hits 200, a vast majority of the subjects in class 2 rapidly increase, where as class 1 generally remain consistent (in y).

If we try 3 classes.

And 4 classes?

As far as choosing how many classes are appropriate, the generally accepted method is to use the BIC (as opposed to the AIC), with the lower BIC being the preferred model. With this data we get a BIC of 32941 for 4 classes, 32972 for 3 classes and 33084 or 2 classes. This suggests 4 classes offers a slightly better fit than 3. Actually truth be told, 5 classes achieves a slightly lower BIC again. However like all modeling, you have to have some human (or really really smart robot circa 2035) input. With 4 classes, the 4th class only holds 10% of subjects, in our reduced modeling subset of the data set, this is 25 people. You have to decide if this is appropriate for your data or not. Here I certainly wouldn’t go any lower than 10%.

Refining the model

As I mentioned earlier, a linear effect may not be the best way to model this data. Here is my preferred number of classes, 3, with a quadratic term introduced. This takes substantially longer to complete (converge). Compare this graph below, to the graph 2 above (for 3 latent classes). There are some subtle differences

As posterior probabilities, and percentage of class membership is concerned, the model with only the linear term and the model that also has the quadratic differ quite a bit. It looks like the quadratic term has a tougher time determining which class each subject falls into. See below.

Just linear term

Posterior classification:

class1 class2 class3

N 139.00000 59.00000 47.00000

% 56.73469 24.08163 19.18367

Posterior classification table:

--> mean of posterior probabilities in each class

prob1 prob2 prob3

class1 0.8800 0.08060 0.039600

class2 0.1390 0.86000 0.000981

class3 0.0887 0.00293 0.908000

> summary(as.numeric(d3$pprob[d3$pprob[,"class"]==1,"prob1"]))

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.5024 0.8210 0.9402 0.8798 0.9931 1.0000

> summary(as.numeric(d3$pprob[d3$pprob[,"class"]==2,"prob2"]))

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.5136 0.7642 0.9549 0.8597 0.9972 1.0000

> summary(as.numeric(d3$pprob[d3$pprob[,"class"]==3,"prob3"]))

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.4962 0.8986 0.9898 0.9083 0.9994 1.0000

Quadratic term included

Posterior classification:

class1 class2 class3

N 110.00000 44.00000 91.00000

% 44.89796 17.95918 37.14286

Posterior classification table:

--> mean of posterior probabilities in each class

prob1 prob2 prob3

class1 0.7990 0.0237 0.1770

class2 0.0465 0.8670 0.0862

class3 0.1630 0.0482 0.7890

> summary(as.numeric(d32$pprob[d32$pprob[,"class"]==1,"prob1"]))

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.3598 0.6565 0.8524 0.7992 0.9688 0.9999

> summary(as.numeric(d32$pprob[d32$pprob[,"class"]==2,"prob2"]))

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.4284 0.7217 0.9847 0.8673 0.9997 1.0000

> summary(as.numeric(d32$pprob[d32$pprob[,"class"]==3,"prob3"]))

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.4159 0.6348 0.7822 0.7890 0.9618 1.0000

However, the BIC actually reduces from 32972 to 32594 which is a much bigger difference than we saw by changing the number of classes. In this instance I would say a) further model exploration is required and b) if I had to, I would chose the model based on the linear term only, giving preference to the better posterior probability performance over the BIC performance.

Hopefully this has been useful as an introduction to latent class modeling and/or and introduction to the lcmm package and/or plotting and visualising longitudinal latent class mixture modeling.

Final note

I have been in contact with the author of this package, who early next year aims to enhance some of the features of the package.

*prior to running the analysis on the data from here on I limited the data set to a subset based on a few criteria, mainly to do with year and initial x value (x0). This was purely to eliminate known calendar and other effects effecting the efficiency of the algorithm. I can provide more information on this if contacted by anyone interested.

**********************************************************************************

Here is the script used in this example. I haven’t included the loading of the data as it was a data set from a project and I have subsequently applied a coefficient to the x and y variable so it is not obvious what they are, for obvious reasons. NOTE: I had to switch between the id column being a factor and character depending on which function I was running at the time. For the lcmm() it needed to be a factor, or the postprob() function would give warnings (not partitioning the data correctly), but then it needed to be a character for the sapply() function to work properly It also needed to be a character for the colour=id in ggplot to avoid the legend ‘blowing out’ with 2000 odd levels available (despite only 3 being plotted). I’m sure there was a better way to do this (probably re write the sapply(), I just ran out of time). Got a question on this, just ask.

Data used is available here. Use `load(“2011-10-04_lcmm_post_mc.rdata”)`.

R-bloggers.com offers daily e-mail updates about R news and tutorials about learning R and many other topics. Click here if you're looking to post or find an R/data-science job.

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.