Build your own Twitter Archive and Analyzing Infrastructure with MongoDB, Java and R [Part 1] [Update]

Want to share your content on R-bloggers? click here if you have a blog, or here if you don't.

UPDATE: The Java program is now also available in a version that uses the Streaming API. You can find it on my GitHub account.

Hey everybody,

you probably know the problems that come up when you want to work with the Twitter API. Twitter has introduced a lot of restrictions that take much of the fun out of the data mining process.

Another problem is that Twitter provides no way to analyze your data at a later time. You can't just start a Twitter search that gives you all the tweets ever written about your topic. And you can't get all tweets related to a particular event if there are a lot of them. So I always dreamed of my own archive filled with Twitter data. And then I saw MongoDB. The name MongoDB comes from “humongous”; it is an open-source document database and the leading NoSQL database.

This document-oriented structure makes it very easy to use, especially for our purpose, because everything revolves around the JSON format (MongoDB stores documents as BSON, a binary form of JSON). And JSON is, of course, exactly the format we get from Twitter. So we don't need to process our tweets much; we can just save them into our database.

Structure

So let's take a closer look at our structure:

As you can see, there are two steps. First we need to get the Twitter data and store it in the database, and then we need to find a way to get this data into R and start analyzing.

In this first tutorial I will show you how to set up the first part: we install MongoDB locally on your computer and write a Java crawler that gets the data directly from the Twitter API and stores it.

MongoDB

Installing MongoDB is as easy as using it. Just go to the MongoDB website and download the precompiled binaries for your operating system:

http://www.mongodb.org/downloads

After downloading, unpack the archive. And that's it.

Now open the folder and take a look at the “bin” subfolder. Here you can see the different executables. For our purposes we need mongod and mongo; mongo is the interactive shell you can use to query the database.

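mongod is the MongoDB daemon, so it is basically the server, and it has to be running every time we want to work with the database. By default it stores its data in /data/db (C:\data\db on Windows), so create that folder first or point mongod to another folder with the --dbpath option.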

But there is an even easier way:

Just download the IntelliJ IDEA Java IDE http://www.jetbrains.com/idea/download/index.html

This cool, lightweight IDE has a nice third-party MongoDB plugin that will help you a lot when working with the database. Of course there are also plugins for Eclipse and NetBeans, but I haven't tried them yet. Maybe you have?

After installing the IDE, download and install the MongoDB plugin as well: http://plugins.jetbrains.com/plugin/7141

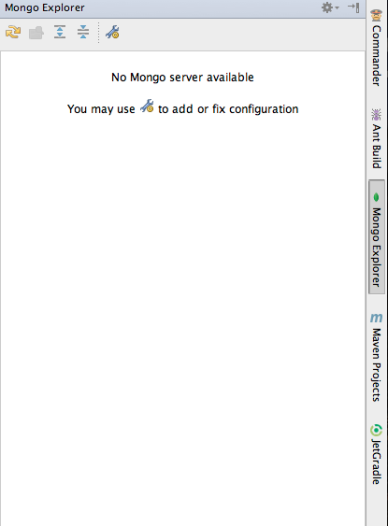

You will then find the Mongo Explorer on the right side of your workspace.

Go to the settings of this plugin.

There, add the path to the mongo executable. Then add a server connection by clicking the + at the end of your server list. Just leave all the settings as they are and click OK.


Now the connection to our server is established. If you can't connect, try restarting the IDE or start mongod manually.

That's basically it for the MongoDB part. Let's take a look at our Java crawler.

Java

I will go through the code step by step. You can find the complete code on my GitHub.

But before we start, we have to download some additional JAR libraries: one that helps us work with MongoDB and one for the Twitter API.

MongoDB Java driver: http://central.maven.org/maven2/org/mongodb/mongo-java-driver/2.11.3/mongo-java-driver-2.11.3.jar

Twitter4j package: http://twitter4j.org/archive/twitter4j-3.0.4.zip

Now add twitter4j-core, twitter4j-stream and the MongoDB driver JARs to your project.

Our Java program starts with a small menu that asks for the keyword we want to look for. It then works like a loop: it searches Twitter at a fixed interval (every 60 seconds in the code below) and saves new tweets to our database. So it only saves tweets while it's running, but this is perfect for monitoring a certain event, for example.

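```java
// Imports for the whole crawler class (used by all the methods shown in this post)
import java.net.UnknownHostException;
import java.util.List;
import java.util.Scanner;

import com.mongodb.BasicDBObject;
import com.mongodb.DB;
import com.mongodb.DBCollection;
import com.mongodb.Mongo;
import com.mongodb.MongoException;
import twitter4j.*;
import twitter4j.conf.ConfigurationBuilder;

// Fields of the crawler class shared by the methods below
private ConfigurationBuilder cb;
private DB db;
private DBCollection items;

public void loadMenu() throws InterruptedException {
    System.out.print("Please choose your Keyword:\t");
    Scanner input = new Scanner(System.in);
    String keyword = input.nextLine();

    connectdb(keyword);

    // Search, store, wait, repeat until the program is stopped
    while (true) {
        cb = new ConfigurationBuilder();
        cb.setDebugEnabled(true);
        cb.setOAuthConsumerKey("XXX");
        cb.setOAuthConsumerSecret("XXX");
        cb.setOAuthAccessToken("XXX");
        cb.setOAuthAccessTokenSecret("XXX");

        getTweetByQuery(true, keyword);

        cb = null;
        Thread.sleep(60 * 1000); // wait 60 seconds before the next search
    }
}
```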
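The loop above hard-codes the four OAuth values as "XXX" placeholders. If you would rather not keep real keys in your source code (for example because the project lives on GitHub), a minimal sketch like the following could read them from environment variables instead. The class name and the variable names are hypothetical; the loaded values would then go into the same cb.setOAuth... calls.

```java
import java.util.Map;

/** Hypothetical helper: loads the four Twitter OAuth values from environment variables. */
class TwitterCredentials {
    // Hypothetical variable names; pick whatever suits your setup
    static final String CONSUMER_KEY = "TWITTER_CONSUMER_KEY";
    static final String CONSUMER_SECRET = "TWITTER_CONSUMER_SECRET";
    static final String ACCESS_TOKEN = "TWITTER_ACCESS_TOKEN";
    static final String ACCESS_TOKEN_SECRET = "TWITTER_ACCESS_TOKEN_SECRET";

    final String consumerKey;
    final String consumerSecret;
    final String accessToken;
    final String accessTokenSecret;

    private TwitterCredentials(Map<String, String> env) {
        consumerKey = require(env, CONSUMER_KEY);
        consumerSecret = require(env, CONSUMER_SECRET);
        accessToken = require(env, ACCESS_TOKEN);
        accessTokenSecret = require(env, ACCESS_TOKEN_SECRET);
    }

    /** Takes the environment as a map so it is easy to test; pass System.getenv() in real use. */
    static TwitterCredentials fromEnv(Map<String, String> env) {
        return new TwitterCredentials(env);
    }

    // Fail early with a clear message instead of getting a 401 from Twitter later
    private static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing environment variable " + name);
        }
        return value;
    }

    public static void main(String[] args) {
        try {
            TwitterCredentials c = fromEnv(System.getenv());
            System.out.println("Twitter credentials loaded");
            // In the crawler: cb.setOAuthConsumerKey(c.consumerKey); cb.setOAuthConsumerSecret(c.consumerSecret); ...
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```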

After the program has received a keyword, it connects to the database with connectdb(keyword):

```java
public void connectdb(String keyword) {
    try {
        initMongoDB();
        if (db == null) {
            return; // initMongoDB() failed, so there is nothing to connect to
        }
        // One collection per keyword; MongoDB creates it if it doesn't exist yet
        items = db.getCollection(keyword);

        //make the tweet_ID unique in the database
        BasicDBObject index = new BasicDBObject("tweet_ID", 1);
        items.ensureIndex(index, new BasicDBObject("unique", true));
    } catch (MongoException ex) {
        System.out.println("MongoException :" + ex.getMessage());
    }
}

public void initMongoDB() throws MongoException {
    try {
        System.out.println("Connecting to Mongo DB..");
        Mongo mongo = new Mongo("127.0.0.1"); // local server on the default port 27017
        db = mongo.getDB("tweetDB");          // created automatically if it doesn't exist
    } catch (UnknownHostException ex) {
        System.out.println("MongoDB Connection Error :" + ex.getMessage());
    }
}
```

The initMongoDB function connects to our local MongoDB server and creates a database object called “db”. And here something cool happens: you can pass in whatever database name you like. If this database doesn't exist, MongoDB automatically creates it for you (as soon as something is written to it), and you can work with it as if nothing had happened.

The same happens when we call db.getCollection(keyword): it automatically creates the collection if it doesn't exist yet. So no more error messages 😉

MongoDB is structured like this:

Database -> Collections -> Documents

You can compare a collection to a table in an SQL database and the documents to the rows of that table; in our case, the documents are our tweets.
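To make the Database -> Collections -> Documents hierarchy and the create-on-first-use behavior concrete, here is a toy in-memory model in plain Java. It is only an illustration, not how MongoDB or its driver work internally: ToyMongo and its methods are hypothetical, and unlike this toy, a real MongoDB server only persists a new database or collection once something is written to it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy in-memory model (not MongoDB): databases hold collections, collections hold documents. */
class ToyMongo {
    // database name -> collection name -> documents (each document is a field -> value map)
    private final Map<String, Map<String, List<Map<String, Object>>>> databases = new HashMap<>();

    /** Like db.getCollection(name): returns the collection, creating database and collection if missing. */
    List<Map<String, Object>> getCollection(String dbName, String collectionName) {
        return databases
                .computeIfAbsent(dbName, k -> new HashMap<>())
                .computeIfAbsent(collectionName, k -> new ArrayList<>());
    }

    boolean databaseExists(String dbName) {
        return databases.containsKey(dbName);
    }

    public static void main(String[] args) {
        ToyMongo server = new ToyMongo();
        // "tweetDB" and the keyword collection "rstats" did not exist before this call
        List<Map<String, Object>> tweets = server.getCollection("tweetDB", "rstats");

        Map<String, Object> tweet = new LinkedHashMap<>(); // one document = one tweet
        tweet.put("tweet_ID", 1L);
        tweet.put("tweet_text", "Hello from R");
        tweets.add(tweet);

        System.out.println(server.getCollection("tweetDB", "rstats").size()); // 1
    }
}
```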

But there are also two very important lines of code:

```java
//make the tweet_ID unique in the database
BasicDBObject index = new BasicDBObject("tweet_ID", 1);
items.ensureIndex(index, new BasicDBObject("unique", true));
```

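Here we create a unique index on the tweet_ID field of our collection. MongoDB then rejects every insert whose tweet_ID is already stored, so a tweet is only saved if it isn't in our database yet. Otherwise we would get duplicate entries, because consecutive searches usually return many of the same tweets.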
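The effect of the unique index can be mimicked in memory: a set of already-seen tweet_ID values plays the role of the index, and an insert with a known ID is rejected. UniqueTweetStore is a hypothetical illustration, not driver code; in the real crawler the MongoDB server performs this check and reports a duplicate key error (code 11000) when the write is acknowledged.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** In-memory sketch of a unique index on tweet_ID (MongoDB enforces this on the server). */
class UniqueTweetStore {
    private final List<Map<String, Object>> documents = new ArrayList<>();
    private final Set<Object> indexedIds = new HashSet<>(); // stands in for the unique index

    /** Returns true if the document was stored, false if its tweet_ID was already present. */
    boolean insert(Map<String, Object> doc) {
        Object id = doc.get("tweet_ID");
        if (!indexedIds.add(id)) {
            return false; // MongoDB would reject this insert with a duplicate key error
        }
        documents.add(doc);
        return true;
    }

    int size() {
        return documents.size();
    }

    public static void main(String[] args) {
        UniqueTweetStore store = new UniqueTweetStore();
        // Two consecutive searches that both return tweet 2
        long[][] searches = { {1, 2}, {2, 3} };
        for (long[] batch : searches) {
            for (long id : batch) {
                store.insert(Collections.<String, Object>singletonMap("tweet_ID", id));
            }
        }
        System.out.println(store.size()); // 3
    }
}
```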

But let's get some tweets!

If you take a look at our loadMenu function again, it now creates a ConfigurationBuilder and sets the login details for our Twitter API access (replace the XXX placeholders with your own OAuth keys). The TwitterFactory provided by the Twitter4j package needs this ConfigurationBuilder, and we use it in the next function, getTweetByQuery:

```java
public void getTweetByQuery(boolean loadRecords, String keyword) throws InterruptedException {
    // Only search if connectdb() managed to open our collection
    if (items != null) {
        TwitterFactory tf = new TwitterFactory(cb.build());
        Twitter twitter = tf.getInstance();
        try {
            Query query = new Query(keyword);
            query.setCount(100); // tweets per request (100 is the Search API maximum)
            QueryResult result = twitter.search(query);
            System.out.println("Getting Tweets...");

            List<Status> tweets = result.getTweets();
            for (Status tweet : tweets) {
                // Copy only the fields we want to keep into a new document
                BasicDBObject basicObj = new BasicDBObject();
                basicObj.put("user_name", tweet.getUser().getScreenName());
                basicObj.put("retweet_count", tweet.getRetweetCount());
                basicObj.put("tweet_followers_count", tweet.getUser().getFollowersCount());
                basicObj.put("source", tweet.getSource());
                // The driver cannot serialize a twitter4j GeoLocation object, so store plain numbers (or null)
                GeoLocation geo = tweet.getGeoLocation();
                basicObj.put("coordinates", geo == null ? null
                        : new BasicDBObject("lat", geo.getLatitude()).append("lon", geo.getLongitude()));
                UserMentionEntity[] mentioned = tweet.getUserMentionEntities();
                basicObj.put("tweet_mentioned_count", mentioned.length);
                basicObj.put("tweet_ID", tweet.getId());
                basicObj.put("tweet_text", tweet.getText());
                try {
                    // The server rejects this insert if the tweet_ID is already stored
                    items.insert(basicObj);
                } catch (Exception e) {
                    System.out.println("MongoDB Connection Error : " + e.getMessage());
                }
            }
        } catch (TwitterException te) {
            System.out.println("te.getErrorCode() " + te.getErrorCode());
            System.out.println("te.getExceptionCode() " + te.getExceptionCode());
            System.out.println("te.getStatusCode() " + te.getStatusCode());
            if (te.getStatusCode() == 401) {
                System.out.println("Twitter Error : \nAuthentication credentials (https://dev.twitter.com/pages/auth) were missing or incorrect.\nEnsure that you have set valid consumer key/secret, access token/secret, and the system clock is in sync.");
            } else {
                System.out.println("Twitter Error : " + te.getMessage());
            }
        }
    } else {
        System.out.println("MongoDB is not connected! Please check that the mongoDB instance is running..");
    }
}
```

After creating our connection to Twitter, we search for up to 100 tweets matching our keyword. Then we save the content of these tweets to our database. But we select what we want to save, as we don't need all the information Twitter delivers.

We loop through our tweets list, copy the fields we want from each Twitter4j Status object into a BasicDBObject, and finally insert this object into our database; thanks to the unique index, tweets that are already stored are rejected.
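The crawler picks its fields through Twitter4j getters. If you work with the raw JSON of a tweet instead, the same "keep only what we need" step could be sketched as a projection over nested maps. This sketch assumes the JSON has already been parsed into java.util.Map objects by some JSON library (not shown); the paths follow the field names of Twitter's v1.1 tweet JSON (id, text, user.screen_name, ...), TweetProjection is a hypothetical name, and missing fields simply become null.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch: keep a few fields of a parsed tweet, renamed to our MongoDB field names. */
class TweetProjection {
    // Stored field name -> dotted path in the (already parsed) tweet JSON
    static final Map<String, String> FIELDS = new LinkedHashMap<>();
    static {
        FIELDS.put("tweet_ID", "id");
        FIELDS.put("tweet_text", "text");
        FIELDS.put("user_name", "user.screen_name");
        FIELDS.put("tweet_followers_count", "user.followers_count");
        FIELDS.put("retweet_count", "retweet_count");
        FIELDS.put("source", "source");
    }

    /** Follows a dotted path through nested maps; returns null if any step is missing. */
    static Object getPath(Map<String, Object> doc, String path) {
        Object current = doc;
        for (String key : path.split("\\.")) {
            if (!(current instanceof Map)) {
                return null;
            }
            current = ((Map<?, ?>) current).get(key);
        }
        return current;
    }

    /** Builds the document to store: only the configured fields, everything else is dropped. */
    static Map<String, Object> project(Map<String, Object> tweet) {
        Map<String, Object> doc = new LinkedHashMap<>();
        for (Map.Entry<String, String> field : FIELDS.entrySet()) {
            doc.put(field.getKey(), getPath(tweet, field.getValue()));
        }
        return doc;
    }

    public static void main(String[] args) {
        Map<String, Object> user = new LinkedHashMap<>();
        user.put("screen_name", "someone");
        user.put("followers_count", 42);
        Map<String, Object> tweet = new LinkedHashMap<>();
        tweet.put("id", 123L);
        tweet.put("text", "Monitoring #rstats");
        tweet.put("user", user);
        tweet.put("lang", "en"); // not in FIELDS, so it is not stored
        // prints {tweet_ID=123, tweet_text=Monitoring #rstats, user_name=someone, tweet_followers_count=42, retweet_count=null, source=null}
        System.out.println(project(tweet));
    }
}
```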

Then the program sleeps for 60 seconds and starts the whole loop again.
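The loop in loadMenu pauses with Thread.sleep. As an alternative, the same "search, store, wait, repeat" cycle could be written with a ScheduledExecutorService from java.util.concurrent. This is a sketch, not the author's code: PollingLoop is a hypothetical class, and pollOnce stands in for one call of getTweetByQuery. scheduleWithFixedDelay waits the given delay after each run has finished, which matches sleeping at the end of each pass, and polling can be stopped cleanly with shutdown().

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical alternative to the while/Thread.sleep loop. */
class PollingLoop {
    /**
     * Runs pollOnce immediately and then again delayMillis after each run finishes.
     * Returns the scheduler so the caller can stop polling with shutdown().
     */
    static ScheduledExecutorService startPolling(Runnable pollOnce, long delayMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleWithFixedDelay(() -> {
            try {
                pollOnce.run();
            } catch (RuntimeException e) {
                // An uncaught exception would cancel all future runs, so log it and keep polling
                System.out.println("Poll failed: " + e.getMessage());
            }
        }, 0, delayMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger passes = new AtomicInteger();
        CountDownLatch threePasses = new CountDownLatch(3);
        // Stand-in for "search Twitter and store new tweets"; the crawler would use 60 * 1000 ms
        ScheduledExecutorService scheduler = startPolling(() -> {
            System.out.println("Searching Twitter, pass " + passes.incrementAndGet());
            threePasses.countDown();
        }, 100);
        threePasses.await();
        scheduler.shutdown();
    }
}
```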

Settings and Usage

If you want to monitor an event, there are two settings you can adjust to your needs: the time the loop waits (Thread.sleep in loadMenu) and the number of tweets each search returns (query.setCount in getTweetByQuery).

So if you monitor an event that produces a huge number of tweets, you can increase the number of tweets returned (up to 100 per request, which the code already uses) and decrease the time the loop waits. But be careful: if the program connects too often, Twitter will deny access.
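To choose these two settings, a little arithmetic helps. The sketch below assumes the limits Twitter documented for the v1.1 search endpoint, 180 requests per 15-minute window with user authentication, and at most 100 tweets per request; treat these values as assumptions and check the current documentation. With the 60-second pause, the crawler makes at most 15 requests per window and can store at most 6,000 tweets per hour. If more than 100 new tweets appear between two searches, the rest are never seen, which is when a shorter pause pays off. PollingBudget is a hypothetical helper name.

```java
/** Hypothetical back-of-the-envelope helper for choosing the sleep time and tweet count. */
class PollingBudget {
    /** Searches started within one rate-limit window when waiting sleepSeconds between them. */
    static long requestsPerWindow(long windowSeconds, long sleepSeconds) {
        // Ceiling division; ignores the time the search itself takes, so it is an upper bound
        return (windowSeconds + sleepSeconds - 1) / sleepSeconds;
    }

    /** Approximate upper bound on tweets stored per hour: one full page of `count` per search. */
    static long maxTweetsPerHour(long sleepSeconds, int count) {
        return (3600 / sleepSeconds) * count;
    }

    /** Shortest sleep (in seconds) that stays within `limit` requests per window. */
    static long minSleepSeconds(long windowSeconds, long limit) {
        return (windowSeconds + limit - 1) / limit;
    }

    public static void main(String[] args) {
        long window = 15 * 60; // assumed 15-minute rate-limit window
        long limit = 180;      // assumed search requests per window (user auth, API v1.1)
        System.out.println(requestsPerWindow(window, 60));  // 15
        System.out.println(maxTweetsPerHour(60, 100));      // 6000
        System.out.println(minSleepSeconds(window, limit)); // 5
    }
}
```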

So start monitoring some events or just random keywords by running the program and typing in your keyword. The program automatically creates a collection named after the keyword, in which the tweets are stored.

This was just the first step in building our own Twitter archive and analysis infrastructure. In the next part I will show you how to connect R to your Twitter database and start analyzing your saved tweets.

I hope you enjoyed this first part; please feel free to ask questions.

If you want to stay up to date with my blog, please give me a like on Facebook, a +1 on Google+ or follow me on Twitter.

Part 2
